Why augmented data quality and governance, not fully autonomous AI, are essential to trusted, scalable enterprise data.

The NYC Data Management Summit gathered about 400 industry leaders, practitioners, and innovators to explore how enterprises can keep pace with the demands of modern data management. One message came through loud and clear: data quality and governance can no longer be static, manual, or reactive.

Data quality must be augmented, intelligent, and adaptive, empowering people with automation and insight rather than replacing them. Meaningful data quality depends on keeping people in the loop, with platforms that surface issues, guide remediation, and continuously adapt alongside business and regulatory needs.

Key Takeaways from the NYC Data Management Summit

- Static approaches to governance are failing. Manual rules, periodic audits, and siloed committees can’t keep up with today’s data complexity, volume, and regulatory requirements.

- AI adoption raises the stakes for data quality. Enterprises are racing to scale AI initiatives, but without trusted, validated data, models will underperform and carry data errors into every decision built on them.

- Business and technical teams must unite. Data quality requires both technical expertise and business context. Without a shared language, governance remains a compliance exercise instead of a business enabler.

In a panel on redefining data management with AI, our CEO, Gorkem Sevinc, spoke alongside data leaders from Bank of New York, BlackRock, and Google about what enterprise data management teams need today to keep pace with the evolution and adoption of AI. Here’s what we heard:

Static Data Governance Is Failing

Enterprises are facing exponential growth in both the volume and complexity of their data. Traditional approaches of manual rules, quarterly audits, and siloed governance committees don’t scale and dramatically increase risk.

Several speakers highlighted the urgent need for new approaches:

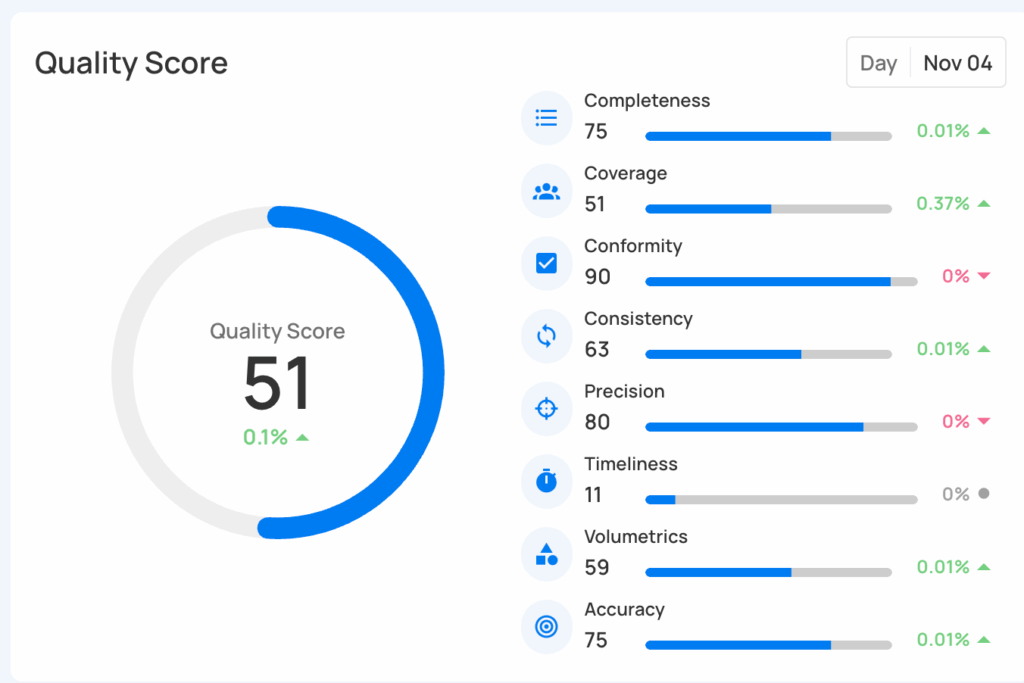

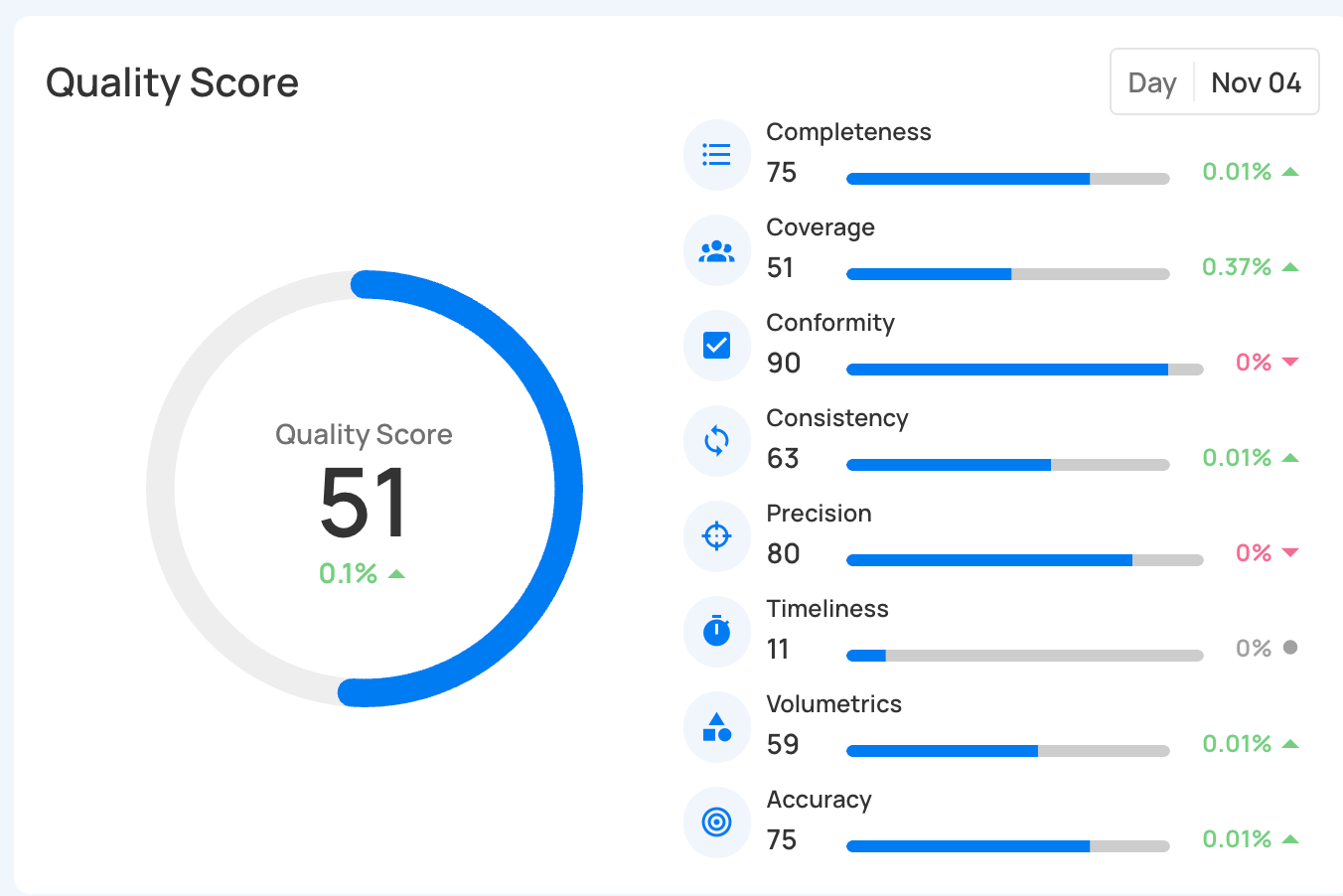

- Automation is essential. Profiling data across thousands of fields and hundreds of sources is impossible to maintain manually. Augmented data quality platforms that auto-generate and continuously adjust quality rules put time back in the hands of data teams and build higher levels of data confidence across the business.

- Data quality is the foundation of AI. As enterprises race to scale AI initiatives, untrustworthy data derails them before they start. The 1-10-100 rule of data quality (roughly $1 to prevent an error at the source, $10 to correct it later, and $100 once it causes a failure) is amplified by AI, which lets anyone in the organization act as a business analyst and generate new insights from whatever data is available. Augmented data quality ensures projects begin with reliable inputs.

- Governance must be dynamic. Regulations like BCBS 239, GDPR, and HIPAA are moving targets. Augmented governance enables organizations to adapt policies and controls as needed to keep pace with the latest requirements and prevent issues before they arise.

Our CEO Gorkem Sevinc puts it this way: “Governance has to evolve at the speed of your business, not the speed of your committees.”
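To make the idea of auto-generated, continuously adjusted rules concrete, here is a minimal sketch in Python. It assumes a single numeric field and a simple mean-plus-or-minus-k-standard-deviations bound; the function names `infer_range_rule` and `check` are hypothetical and do not describe any particular platform's implementation.

```python
from statistics import mean, stdev

def infer_range_rule(values, k=3.0):
    """Derive a (low, high) bound from profiled values: mean +/- k * stdev.

    Illustrative assumption: real platforms likely use richer profiling
    than a single statistical band.
    """
    clean = [v for v in values if v is not None]
    mu, sigma = mean(clean), stdev(clean)
    return (mu - k * sigma, mu + k * sigma)

def check(value, rule):
    """Return True when a value is present and falls inside the inferred range."""
    low, high = rule
    return value is not None and low <= value <= high

# Profile historical values, then derive a rule instead of hand-writing one.
history = [100, 102, 98, 101, 99, 103, 97]
rule = infer_range_rule(history)

# As new data arrives, re-profile so the rule adjusts with the data.
adjusted_rule = infer_range_rule(history + [110, 112])
```

A person still decides whether an adjusted rule reflects a real change in the business or a problem worth investigating, which is the human-in-the-loop part of the argument.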

Reactive to Proactive Is the Next Leap

A recurring theme throughout the summit was the shift from reactive to proactive approaches. The cost of discovering issues after they’ve already impacted customers, operations, or compliance is no longer acceptable.

Instead, augmented data quality platforms need to provide:

- Automatically generated rules to detect anomalies across systems, with the ability to drive downstream remediation using advanced workflows.

- Continuous learning and control improvements that adapt governance as business context, technical requirements, and regulations evolve.

- Centralized management of data quality that unlocks consistent enforcement, simplified governance, and seamless collaboration across business and technical teams.

Data quality is moving from a nice-to-have to a strategic imperative for businesses that need to deliver trusted data at scale. If you only discover bad data after it’s already in production reports, you’re not managing data — you’re managing fallout.
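One way to picture the proactive approach is a quality gate that runs before data reaches production reports. The sketch below is an assumption-laden illustration: the rule names, the record fields, and the `quality_gate` function are all hypothetical, and a real workflow would route quarantined records into a remediation process.

```python
def quality_gate(rows, rules):
    """Split rows into (passed, quarantined) before they are published."""
    passed, quarantined = [], []
    for row in rows:
        # Collect the name of every rule this row violates.
        failures = [name for name, rule in rules.items() if not rule(row)]
        if failures:
            quarantined.append({"row": row, "failed_rules": failures})
        else:
            passed.append(row)
    return passed, quarantined

# Hypothetical rules; in practice these could be generated from profiling.
rules = {
    "amount_non_negative": lambda r: r.get("amount") is not None and r["amount"] >= 0,
    "currency_present": lambda r: bool(r.get("currency")),
}

batch = [
    {"id": 1, "amount": 25.0, "currency": "USD"},
    {"id": 2, "amount": -4.0, "currency": "USD"},
    {"id": 3, "amount": 10.0, "currency": ""},
]

passed, quarantined = quality_gate(batch, rules)
```

Only the rows in `passed` move on. The quarantined rows carry the names of the rules they failed, so whoever reviews them knows where to start.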

Uniting Business and Technical Teams Is Crucial

Finally, one of the most discussed points was that data quality is not just a technical challenge; it’s a business challenge.

Executives need issues explained in terms of customer impact, risk exposure, or revenue opportunity. Data engineers, on the other hand, require detailed diagnostics to help them remediate.

Augmented data quality serves both groups by:

- Translating anomalies into business-friendly insights.

- Surfacing automated root cause analysis with full explainability for remediation.

- Creating a shared language of trust that both sides can act upon.

This alignment is what transforms governance from a compliance checkbox into a driver of business value.

As Gorkem says, “If you can explain a data issue in business language and fix it in technical language, you’ve solved the trust gap.”
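The idea of explaining one issue in two languages can be sketched as a single anomaly record rendered two ways. This is a hypothetical illustration: the `Anomaly` fields, the `business_process` mapping, and both rendering functions are assumptions made for the example, not a description of a specific product.

```python
from dataclasses import dataclass

@dataclass
class Anomaly:
    table: str
    column: str
    rule: str
    failed_rows: int
    total_rows: int
    business_process: str  # hypothetical link from dataset to business process

def business_summary(a: Anomaly) -> str:
    """Describe the issue in terms an executive can act on."""
    pct = 100 * a.failed_rows / a.total_rows
    return (f"{pct:.1f}% of records feeding {a.business_process} "
            "failed a quality check and may be unreliable.")

def technical_detail(a: Anomaly) -> str:
    """Describe the same issue with the diagnostics an engineer needs."""
    return (f"{a.table}.{a.column}: rule '{a.rule}' failed on "
            f"{a.failed_rows}/{a.total_rows} rows")

issue = Anomaly("orders", "ship_date", "not_null", 50, 1000,
                "monthly revenue reporting")
print(business_summary(issue))
print(technical_detail(issue))
```

Both messages come from the same record, so the business and technical views cannot drift apart.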

The Road Ahead: Automation with Accountability

The conversations at the summit underscored a growing consensus: manual-heavy, reactive approaches can’t keep up with enterprise demands. The future lies in intelligent, augmented systems that combine automation with accountability and embed trust into every workflow, pipeline, and decision.

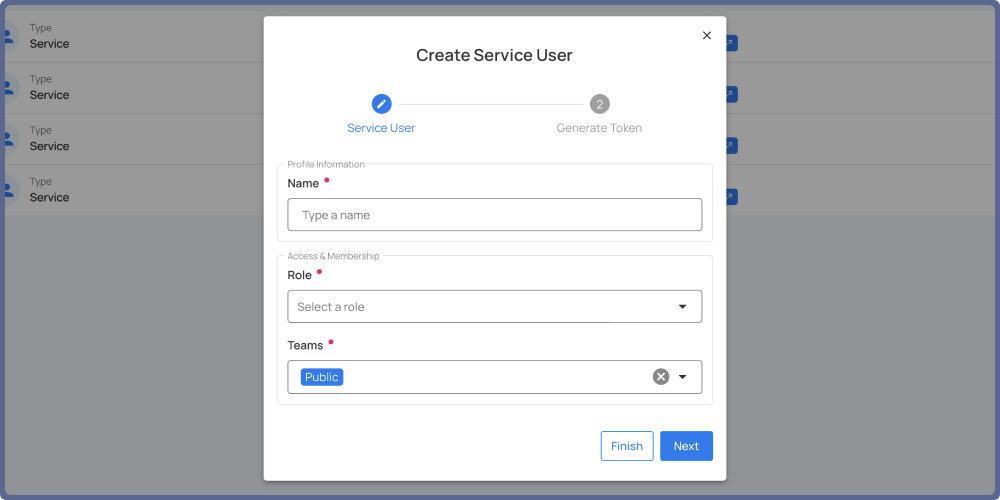

At Qualytics, we see this shift every day. Our platform helps enterprises:

- Accelerate AI initiatives with trusted, validated data.

- Reduce regulatory risk by making data quality adaptive and continuous.

- Eliminate the hidden costs of bad data before they impact operations or customers.

The takeaway for data leaders is clear: the future isn’t about adding more monitoring, it’s about augmenting data quality with automation and oversight — ensuring enterprises can instill deep