Take Control of Data Quality Before It Controls Your Business

Qualytics is the enterprise standard for augmented data quality, giving you proactive,

automated control of the data powering analytics, operations, and AI.

Data issues don’t wait for a convenient moment to surface.A quiet schema change, a mistyped value, or an outdated rule can cascade into broken dashboards, operational risk, incorrect financials, or AI models generating misleading insights because of bad inputs. Most tools alert you only after the damage has already spread.

Qualytics flips that model.

With automated profiling, augmented rule generation, continuous validation, and automated remediation workflows, Qualytics keeps data quality under control before data issues create business issues.

Don’t just observe your data. Control it.

How the Platform Works

Profile & Understand Data

Understand your data, day one.

Problem

Most enterprises are blind to data quality because their metadata is stale and static. Data

evolves significantly faster than manual queries: field-level profiles must reflect production

structure and behavior, not static governance metadata, for data quality to be effective at scale.

Without automation, teams spend weeks validating schemas, chasing variance, and relearning

the same patterns across every datastore. Changes slip in quietly and go unnoticed until

reporting, model inputs, or operations break downstream.

Visibility collapses. Blind spots multiply.

A large enterprise lakehouse can span 10,000 tables with about 60 columns each, fed by hundreds of ingestion pipelines. That is roughly 600,000 fields whose structure and behavior can change weekly or daily. Schema changes include data types, constraints, and nullability, along with distribution shifts reflected in profiling signals such as cardinality, ranges, percentiles, and histograms. Validating those changes with manual SQL at this scale would require dozens of engineers just to keep up. Most teams stay blind to how their data is evolving, until something breaks.

Solution

Qualytics gives teams a trustworthy day-one view of data structure and behavior.

The platform scans every container and field, then unifies structural and behavioral metadata into Field Profiles that establish a day-one baseline and refresh continuously as data changes. Teams find hidden issues in hours, not weeks, and prevent downstream rework. Segmented profiling breaks results out by tenant, region, segment, or any dimension, exposing issues that only surface in a slice of the data.

Automated Structural Profiling

Profile every record to identify field types, formats, nullability, cardinality, and structural patterns, giving you an accurate understanding of how data is really structured

Behavioral Baseline Generation

Learn expected behavior by observing distributions, ranges, volumes, and relationships over time, establishing historical norms that drive anomaly detection, and supports inferred rules and thresholds

Metadata Unification & Enrichment

Consolidate structural and behavioral metadata into unified Field Profiles and store them so teams can analyze trends and track data quality across systems over time

Group By Profiling (Multi-Tenant & Segmented Views)

Automatically break profiles down by tenant, region, segment, or any dimension you choose

“We have a 239M row consumer dataset with 4,000 columns, with a lot of hidden issues we didn’t know about until we automated data profiling.”

Create & Maintain Rules

Scale coverage without scaling headcount.

Problem

Manual rule management collapses at enterprise scale. Teams only add rules reactively after failures break something downstream. They patch the immediate issue and jump to the next firedrill. Coverage stays narrow because rule creation requires collaboration between business and data teams, which limits coverage to only the most critical elements. Rules are often rebuilt across tools with no central view of what is enforced where, increasing reactive management and leaving gaps.

Gaps widen. Incidents slip through.

In that same lakehouse, real coverage requires tens of thousands of quality rules, just for critical fields. If each rule takes about an hour to align with subject matter experts, implement, test, promote to production, and document, the upfront effort exceeds 30,000 engineer-hours. Thousands more hours are required every year as 10 to 20 percent of schemas and business definitions change. No organization can staff that. Coverage stays thin. Teams fall back into reactive rule management.

Solution

Qualytics delivers high rule coverage on day one, without manual overhead.

Proprietary AI models auto-suggest about 95 percent of rules needed from training on your actual data, delivering immediate coverage for advanced technical and foundational business rules. From there, business subject matter experts extend coverage with guided low-code and no-code rule authoring for sophisticated business logic, while engineers can still write full-code rules for highly specialized edge cases. Coverage and maintenance scale with change and configuration, not headcount.

Auto-suggested Rules

Advanced machine learning turns profiling metadata and observed behavior into suggested rules and thresholds for reliable day one coverage. You choose how deep to go with suggested rules by layer or dataset, because Bronze, Silver, and Gold layers require different quality dimensions to be prioritized. In Qualytics, that control is built in through a simple slider

Low/No-Code Rule Authoring

A guided low-code and no-code UI lets business and data teams co-own rule creation and maintenance without advanced SQL skills. Start fast with 50+ prebuilt, production-ready data quality rules spanning common patterns from simple validations to complex reconciliations

Reusable Templates

Turn any rule into a reusable template, then apply it across datasets and systems with a single, centrally governed configuration. This keeps standards consistent, prevents duplicate rule building, and ensures teams always know what is enforced where

Dry Run Validation

Test new or updated rules safely against production data before promotion. Dry runs show expected pass/fail rates and example anomalous records, helping teams calibrate thresholds and avoid noisy alerts in production

“I have two people full-time manually writing and maintaining over a thousand rules, which is inefficient and not scalable for our business.”

Monitor & Validate Quality

Move from manual checks to continuous validation.

Problem

Even with profiling and rules in place, batch monitoring leaves the business exposed. Tools that validate once a day detect issues hours after bad data has already propagated into dashboards, AI models, and business processes. Detection can lag 30+ hours behind the incident. Data keeps changing and quality validation must keep up through continuous human-in-the-loop monitoring and proactive anomaly detection.

Detection lags. Issues spread.

A lakehouse often uses a medallion architecture, processing hundreds of millions of events per day from bronze to silver to gold. Hundreds of transformations compound risk. Even a 1–2 percent weekly regression rate creates join mismatches, casting errors, and logic changes. One small change in bronze can ripple into incorrect metrics in gold, with no hard failure to flag it.

Solution

Qualytics detect anomalies continuously, before issues spread downstream.

The platform monitors structural and behavioral signals as data moves through stages of pipelines and schemas, tracking schema drift, volumes, key metrics, and distributions in near real time. Issues are detected closer to the source and inside pipelines, before bad data is stored or spreads downstream. Teams see what changed, where it changed, and which downstream assets are at risk. Human review of anomalies closes the loop, and feedback continuously improves detection over time.

Anomaly Lifecycle Management

Track anomaly status, ownership, severity, and resolution metadata in one place, creating a clear operational record from detection through closure

Time-Series Monitoring

Track metric and volume trends over time and alert on deviations from historical norms, surfacing partial loads, spikes, regressions, or outages that row-level checks miss

Human-in-the-Loop Anomaly Feedback

Stewards review anomalies, label outcomes, and provide feedback that continuously refines rules and detection through supervised learning over time

UI and API-enabled Monitoring

Enable collaborative monitoring by managing configuration, validation runs, and anomaly workflows through a unified UI or programmatically via APIs, helping teams operate the way they work best

“The team currently relies on visual tracing tools for root cause and impact analysis downstream but the lack of an automated, scalable process is a significant financial risk.”

Notify & Remediate Issues

Turn alerts into prioritized responses.

Problem

Even when issues are detected, many go unresolved because alerts arrive too late or without context. Alerts get misrouted, lack ownership, or fail to explain impact, forcing teams to spend hours figuring out what broke and who owns the fix. Without clear, automated paths for triage and remediation, high-risk incidents get buried in low-value noise. Teams react after bad data has already propagated, fix symptoms instead of root causes, and watch the same failures repeat.

Alert fatigue grows. Trust erodes.

Hundreds of rules can generate thousands to millions of alerts per day across multiple tools. Many are redundant, lack ownership, or provide no impact context. Teams re-run analysis to find offending records while noise buries the critical issues. Alerts bounce between engineering and subject matter experts with no clear path to resolution. By the time an issue is remediated, it is often too late.

Solution

Qualytics turns alerts into prioritized remediation before data issues become business issues.

Every alert includes ownership, severity, impact, and explainable context that pinpoints what changed and the exact offending records. No-code Flows route incidents to the right stewards and can trigger remediation workflow through Jira or ServiceNow, or notify teams in Teams or Slack. Flows can also halt data propagation in pipelines by pausing jobs or gating promotion steps. Actions and affected records are captured in the Enrichment Datastore, creating a governed audit trail that speeds resolution and reduces risk.

Ownership and Accountability

Assign clear owners and lifecycle status to each anomaly giving teams clarity on who is responsible, who is working on it, and what is resolved

Impact Context

Enrich anomalies with explainable context, including anomaly weight, failed rules, and affected source records, making severity clear and preventing critical issues from getting buried in noise

Automated Remediation

Orchestrate governed remediation through Flows and integrations, storing affected records and resolution context in the Enrichment Datastore to create an auditable trail from anomaly to fix

“Alerts don't matter if we're not getting the right people to proactively handle the anomaly and remediate the issues.”

Frequently Asked Questions

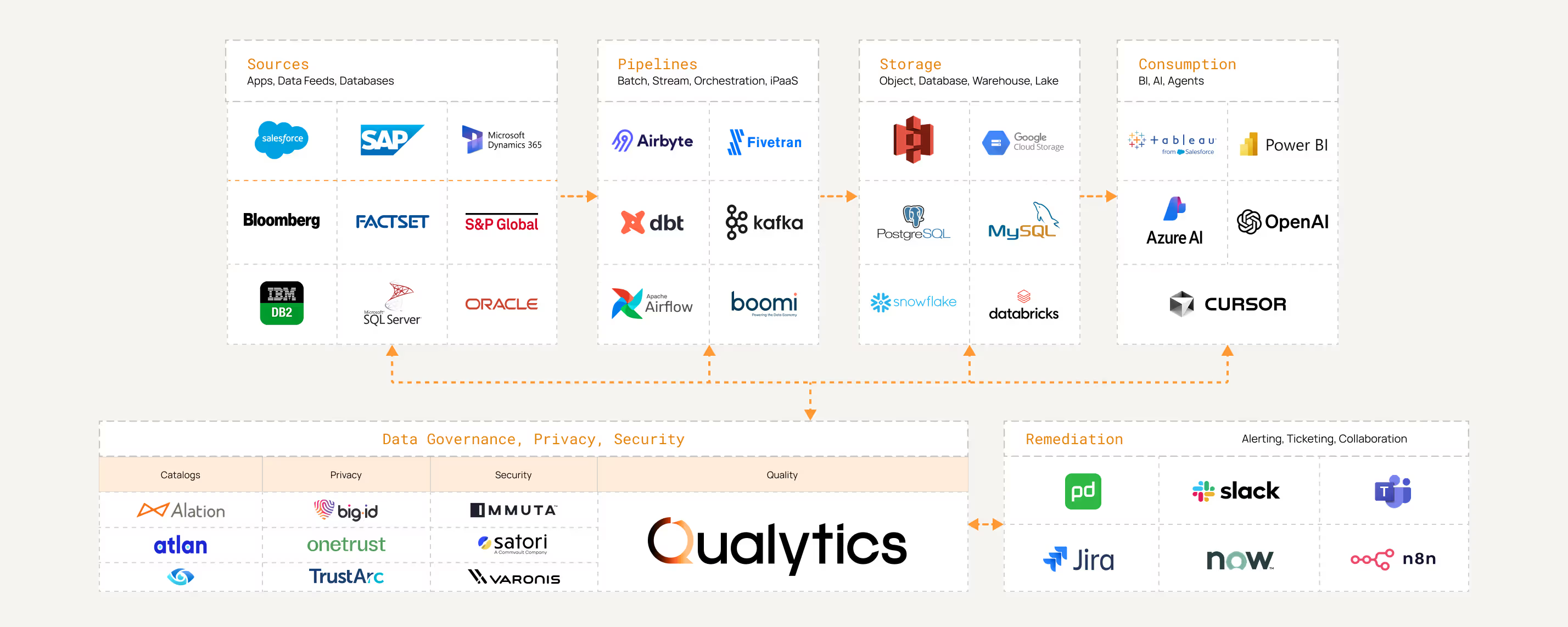

Qualytics supports any SQL data store and raw files on object storage. Popular data warehouses such as Snowflake, Databricks, Redshift; popular databases such as MySQL, PostgreSQL, Athena, Hive, along with CSV, XLSX, JSON and other files on AWS S3, Google Cloud Storage and Azure Data Lake Storage are a few examples of supported data stores. Users can also integrate Qualytics to their streaming data sources through our API.

Qualytics is architected with enterprise-grade scale in mind, and built on Apache Spark and deployed via Kubernetes. Through vertical and horizontal scalability, Qualytics meets enterprise expectations of high volume and high throughput requirements of data quality at scale.

Qualytics never stores your raw data. Raw data is pulled into memory for analysis and subsequently destroyed – anomalies identified are written downstream to an Enrichment datastore maintained by the customer. Highly-regulated industries may choose to deploy Qualytics within their own network where raw data never leaves their network and ecosystem.

A dedicated Customer Success Manager, with mandatory weekly check-ins.

Qualytics supports both modern solutions and legacy systems:

Modern Solutions: Qualytics seamlessly integrates with modern data platforms like Snowflake, Amazon S3, BigQuery, and more to ensure robust data quality management.

Legacy Systems: We maintain high data quality standards across legacy systems including MySQL, Microsoft SQL Server, and other reliable relational database management systems.

For detailed integration instructions, please refer to the quick start guide.

Qualytics automatically profiles data, infers quality rules and thresholds, detects anomalies, initiates remediation workflows, and adapts to data changes without requiring manual reconfiguration.

Yes, Qualytics’s metadata export feature captures the mutable states of various data entities. You can export Quality Checks, Field Profiles, and Anomalies metadata from selected profiles into your designated enrichment datastore.

Quality Scores measure data quality at the field, container, and datastore levels, recorded as a time series to track improvements. Scores range from 0-100, with higher scores indicating better quality.

Ready to take control of your data quality?

Learn how to automate and scale anomaly detection across your data environment.