Managing the quality of your data is crucial for accurate decision-making and operational efficiency. Poor data quality can lead to costly mistakes and missed opportunities. In this article, we explore effective strategies and key practices for maintaining high data quality standards within your organization.

Key Takeaways

- Data Quality Management (DQM) is essential for ensuring data accuracy, reliability, and compliance, forming the backbone of effective decision-making.

- Key components of DQM include data profiling, cleansing, and monitoring, which help identify and rectify data issues while ensuring compliance over time.

- Establishing clear data quality rules and objectives and leveraging modern tools are vital for effective DQM, enhancing operational efficiency and supporting informed business decisions.

Understanding Data Quality Management

Data quality management (DQM) is the backbone of effective data management, ensuring that the data you rely on is accurate, reliable, and fit for purpose. Without high-quality data, organizations risk making uninformed decisions that can lead to costly mistakes and missed opportunities. The essence of DQM lies in its continuous cycle of monitoring, evaluating, and improving data to meet organizational needs.

Key dimensions of data quality include:

- Completeness

- Coverage

- Conformity

- Consistency

- Precision

- Timeliness

- Accuracy

- Volumetrics

These dimensions serve as benchmarks to measure the quality of your data. For instance, timeliness ensures that data is current and accessible when needed, while accuracy checks if the data correctly represents real-world conditions. Understanding these data quality dimensions helps organizations set standards and expectations for their data quality.

Data quality management is an ongoing process demanding continuous effort and refinement to maintain high standards. High-quality data is crucial for long-term strategic planning and informed decision-making, enabling organizations to adapt to changing market conditions and drive innovation. Investing in DQM ensures that business data remains a valuable asset rather than a liability.

Key Components of Data Quality Management

Effective data quality management involves several key components: data profiling, data cleansing, and data monitoring. Each of these components plays a vital role in maintaining and improving the quality of data.

Data profiling is the initial diagnostic stage that examines data structure, irregularities, and overall quality, setting the stage for subsequent cleansing and monitoring efforts. Data cleansing follows, focusing on correcting or eliminating detected errors and inconsistencies.

Lastly, data monitoring provides continuous visibility into the state of data, ensuring that it remains compliant with established standards and expectations over time. Implementing these components is crucial for a robust data quality management framework.

Data Profiling

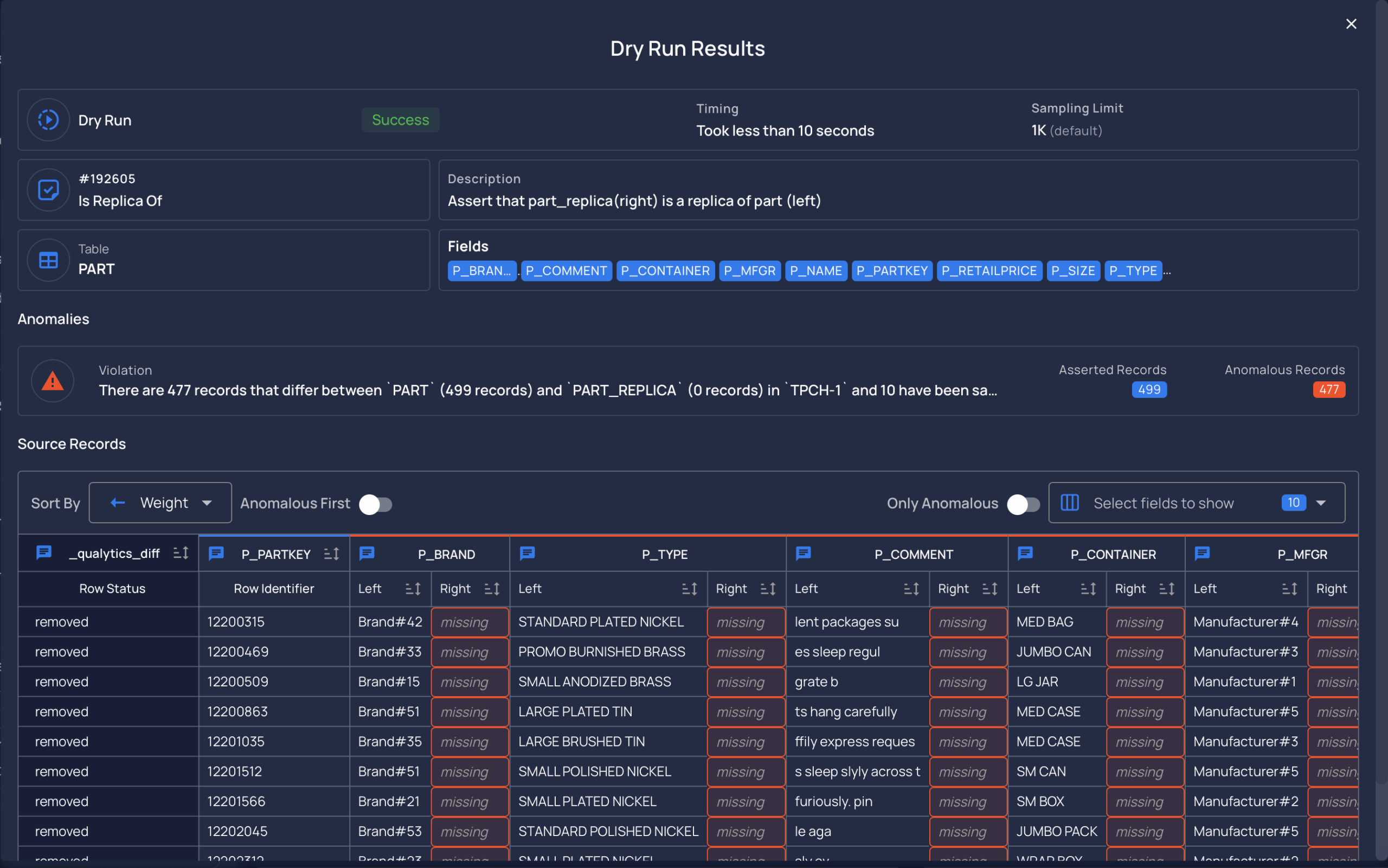

Data profiling is the first step in the data quality management process. It involves examining the data’s structure, content, and relationships to identify errors and inconsistencies and to establish a baseline for what makes the data fit for purpose.

Analyzing data against statistical measures, data profiling offers a clear view of current data quality and sets benchmarks for improvements. This diagnostic stage is crucial for identifying outliers and inconsistencies, which can then be addressed through data cleansing.

The insights gained from data profiling are invaluable for setting standards and expectations and for measuring data quality KPIs. Profiling helps data architects and analysts understand historical data, its shapes and patterns, and the quality issues that must be accounted for when calibrating for fit.

Effective data profiling tools analyze data structure and integrity, providing a foundation for subsequent data quality management activities.
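As a rough illustration of what a profiling pass computes, the sketch below summarizes a single column using only the Python standard library. The function name `profile_column` and the sample values are hypothetical, and a real profiling tool would report far more (types, ranges, patterns, relationships).

```python
from collections import Counter


def profile_column(values):
    """Summarize one column: row count, missing values, distinct values, top value."""
    # Treat None and empty strings as missing (an assumption for this sketch).
    non_null = [v for v in values if v is not None and v != ""]
    counts = Counter(non_null)
    return {
        "rows": len(values),
        "nulls": len(values) - len(non_null),
        "distinct": len(counts),
        "most_common": counts.most_common(1)[0][0] if counts else None,
    }


# Hypothetical sample data for illustration only.
country = ["US", "US", "DE", None, "", "FR", "US"]
print(profile_column(country))
```

Numbers like these give a starting benchmark: a sudden jump in nulls or distinct values on a later run is a signal worth investigating.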

Anomaly Detection

Data cleansing is the process of correcting or eliminating detected errors and inconsistencies in data. This step is essential for enhancing data accuracy and ensuring that the data is fit for analysis and decision-making. Common activities in data cleansing include removing duplicates, correcting errors, and standardizing formats.

Employing various tools and techniques significantly improves data accuracy and completeness, paving the way for reliable insights and informed decisions.
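The cleansing activities named above (standardizing formats and removing duplicates) can be sketched in a few lines of Python. This is a minimal example under stated assumptions: the field names `name` and `email` are hypothetical, and a cleaned email address is assumed to be a good enough duplicate key, which will not hold for every dataset.

```python
def cleanse(records):
    """Standardize name and email formats, then drop duplicates by cleaned email."""
    seen = set()
    cleaned = []
    for rec in records:
        # Standardize formats: trim and lower-case emails, collapse spaces in names.
        email = rec["email"].strip().lower()
        name = " ".join(rec["name"].split()).title()
        if email in seen:
            continue  # duplicate of a record already kept
        seen.add(email)
        cleaned.append({"name": name, "email": email})
    return cleaned


# Hypothetical input: the first two rows describe the same person.
rows = [
    {"name": "  ada   lovelace", "email": "Ada@Example.com "},
    {"name": "Ada Lovelace", "email": "ada@example.com"},
    {"name": "alan turing", "email": "alan@example.com"},
]
print(cleanse(rows))
```

Standardizing before deduplicating matters here: the two "Ada" rows only look identical once casing and whitespace are normalized.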

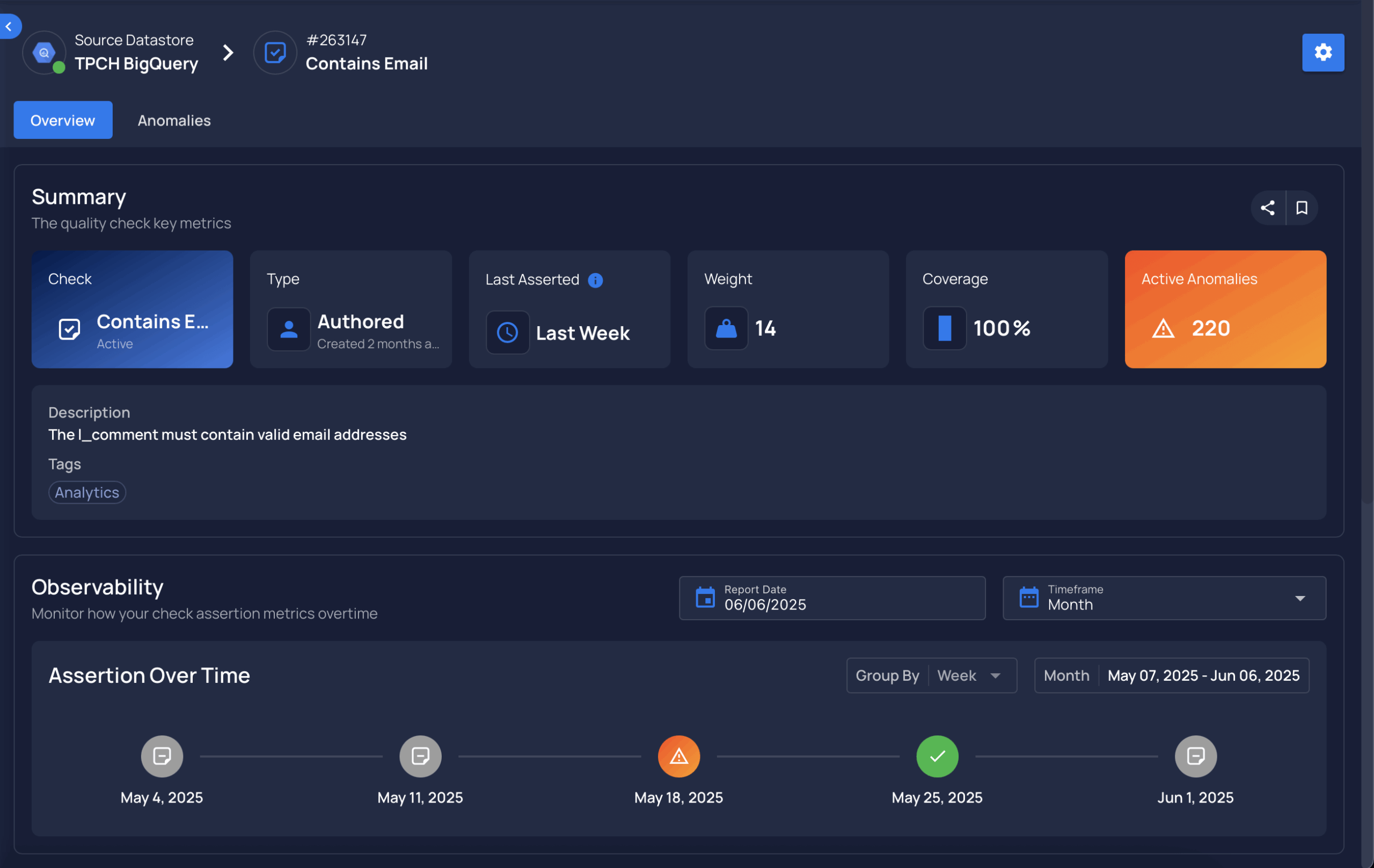

Continuous Improvement through Monitoring

Continuous data monitoring is crucial for maintaining data quality over time. It involves regularly assessing data against established standards to ensure compliance and prevent deterioration. Data monitoring provides real-time visibility into the state of data, allowing organizations to quickly address anomalies and quality issues as they arise. This proactive approach is essential for effective data governance and long-term data quality management.

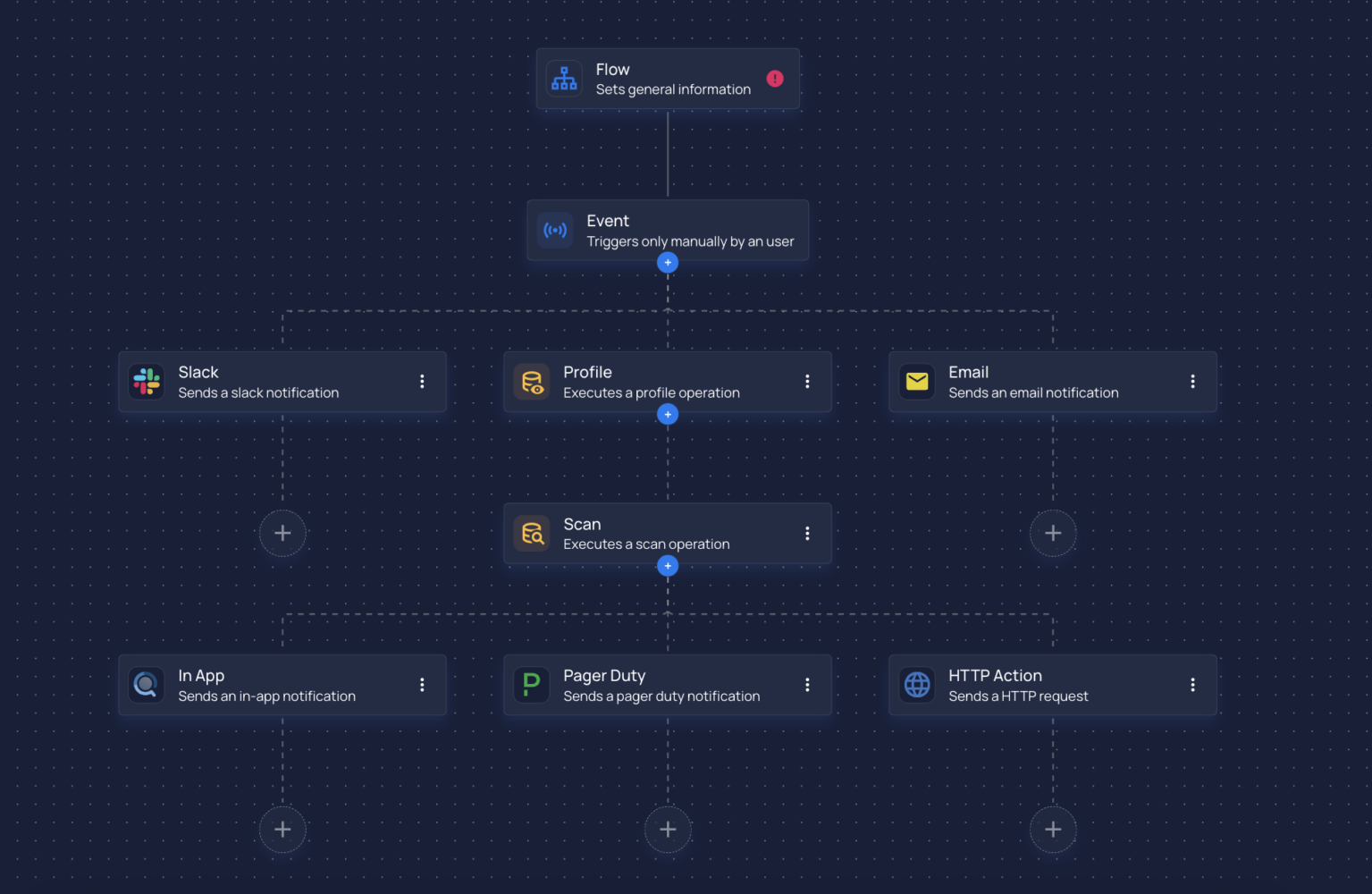

Advanced monitoring systems can detect anomalies in real time and trigger alerts so that quality issues are addressed promptly. Automating quality checks saves time and human resources and enables organizations to move from reactive to proactive data quality management.

Continuous monitoring ensures that the data remains accurate and reliable, supporting data-driven initiatives and business operations.
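One simple form of automated monitoring is a threshold check that runs on every incoming batch and raises an alert when a metric drifts out of bounds. The sketch below is illustrative only: the function name `check_null_rate`, the `amount` field, and the 25% threshold are assumptions, and a production system would route the alert to a notification channel rather than print it.

```python
def check_null_rate(batch, field, max_null_rate):
    """Return an alert message if too many values are missing, otherwise None."""
    if not batch:
        return f"ALERT: no rows received for '{field}'"
    # Count rows where the field is absent, None, or an empty string.
    missing = sum(1 for row in batch if row.get(field) in (None, ""))
    rate = missing / len(batch)
    if rate > max_null_rate:
        return f"ALERT: {field} null rate {rate:.0%} exceeds {max_null_rate:.0%}"
    return None


# Hypothetical batch: two of four rows are missing 'amount'.
batch = [{"amount": 10}, {"amount": None}, {"amount": 5}, {}]
alert = check_null_rate(batch, "amount", max_null_rate=0.25)
if alert:
    print(alert)
```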

Implementing a Data Quality Management Strategy

A solid strategy for data quality management is essential for effective governance and ensuring the accuracy and reliability of data assets. The first step in implementing a data quality management strategy is assessing the current state and identifying data quality needs. This involves evaluating existing data, understanding quality issues, and determining their impacts.

Once the assessment is complete, a phased implementation plan can be developed. This plan breaks down the implementation into manageable phases with specific objectives, reducing risks and allowing for course corrections.

Training employees on how to use data quality tools and continuously monitoring data quality metrics are critical for maintaining accuracy and consistency.

Defining Data Quality Rules

Clear data quality rules are essential for ensuring compliance and supporting analytics. These rules should align with the organization’s business goals and be regularly reviewed to ensure they meet evolving business requirements.

By defining and adhering to these rules, organizations can maintain high data quality standards and support effective data management.
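One way to make such rules explicit and reviewable is to express each as a named check that a record either passes or fails. The rules below are hypothetical examples for an imagined order record, not rules taken from any particular tool or business; real rules would come from the organization's own requirements.

```python
# Hypothetical rules for illustration; each maps a readable name to a check.
RULES = {
    "order_id is present": lambda r: bool(r.get("order_id")),
    "quantity is a positive integer": lambda r: (
        isinstance(r.get("quantity"), int) and r["quantity"] > 0
    ),
    "status is a known value": lambda r: (
        r.get("status") in {"open", "shipped", "cancelled"}
    ),
}


def violations(record):
    """Return the names of all rules the record fails."""
    return [name for name, rule in RULES.items() if not rule(record)]


print(violations({"order_id": "A1", "quantity": 2, "status": "open"}))
print(violations({"order_id": "", "quantity": 0, "status": "lost"}))
```

Keeping the rule names human-readable makes periodic review with business stakeholders easier, since the list doubles as documentation.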

Setting Objectives and Metrics

Establishing specific objectives and measurable metrics is vital for guiding and assessing the effectiveness of data quality initiatives. Implementing KPIs for data quality allows organizations to evaluate performance, identify areas for improvement, and ensure that data quality efforts are aligned with business goals.

Leveraging Data Quality Tools

Modern data quality tools enhance the DQM process by automating tasks and facilitating effective monitoring of data integrity. These tools can significantly reduce manual errors and improve efficiency through automated data cleaning and profiling processes.

Deployment options for data quality tools include on-premises, cloud, or hybrid solutions, depending on business needs. Tools like Qualytics offer a suite of enterprise-grade features for data quality monitoring and management, ensuring data is accurate, consistent, reliable, and compliant with standards.

Leveraging these tools enables organizations to proactively manage data quality and support data-driven decision-making.

Roles and Responsibilities in Data Quality Management

Data quality, and ultimately data governance, cannot be the responsibility of data engineers alone. Subject Matter Experts (SMEs) within business teams collaborate with data engineers to ensure the calibration for fit is done effectively and reflects real-world expectations. This collaborative approach is essential for implementing and maintaining robust data quality management practices.

The goal of data governance is to bring people, process, and technology together to ensure data is usable and has integrity. As a major pillar of data governance, data quality management at scale, supported by a data quality management framework, is crucial for measuring, managing, and continuously improving data quality.

Clearly defining roles and responsibilities fosters a culture of data quality and drives continuous improvement within organizations.

Data Governance Board

The data governance board plays a crucial role in overseeing the data quality strategy and policy framework. This board is responsible for approving budgets, monitoring compliance, and evaluating the performance of data quality initiatives. A data governance policy established by the board ensures consistent and standardized management of data quality across the organization.

This policy serves as a reference for audit trails, compliance checks, and future adjustments to data practices.

Chief Data Officer (CDO)

The Chief Data Officer (CDO) oversees data management and quality within the organization. Working closely with the data governance board, the CDO ensures that data initiatives are effectively managed and aligned with business goals. This alignment supports strategic objectives and enhances data-driven decision-making.

The CDO’s role is pivotal in integrating data quality efforts across the organization, fostering a culture of data excellence.

Data Stewards

Data stewards are subject matter experts responsible for managing data quality within their respective domains. They define data quality rules, monitor metrics, and remedy anomalies as they arise. Data stewards play a critical role in evaluating data against quality metrics such as accuracy, completeness, and consistency. After identifying issues, they report their findings to data governance bodies or relevant stakeholders, ensuring that quality standards are maintained.

Additionally, data stewards act as liaisons between business and technical teams. They ensure that data quality management processes are effectively implemented and that data remains accurate and reliable. By bridging the gap between business needs and technical solutions, data stewards help organizations achieve high data quality standards and support effective data management practices.

Addressing Data Quality Challenges

Addressing data quality challenges is a critical aspect of data management. Organizations often face issues such as:

- Processing large volumes of data quickly while maintaining quality

- Manual data cleansing and reconciliation, which can be costly and time-consuming

To overcome these challenges, robust systems and processes are essential to maintain data quality in real-time environments.

The financial impact of poor data quality can be severe, with businesses losing millions annually due to data-related errors. Data quality tools play a vital role in managing and protecting personal information, aiding in regulatory compliance. By addressing these challenges proactively, organizations can improve operational efficiency and ensure that their data remains a valuable asset.

Managing Large Data Volumes

Managing large data volumes presents several challenges, including the quantity of data, the rate of generation, and the variety of sources. Organizations often face difficulties in effectively managing vast amounts of data, which can impact its quality and utility for decision-making. Technical issues related to transitioning from legacy systems can further complicate large data volume management.

Master Data Management (MDM) frameworks are essential for overcoming recurring data quality issues associated with large data volumes. By establishing clear data entry guidelines and standardizing unit measurements, organizations can address conformity challenges and ensure consistency across their data.
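Standardizing unit measurements can be as simple as converting every incoming value to one canonical unit at the point of entry. The sketch below assumes weights are stored in kilograms and that only the three units shown are accepted; both choices, and the function name `to_kilograms`, are illustrative.

```python
# Conversion factors to the canonical unit (kilograms).
TO_KG = {"kg": 1.0, "g": 0.001, "lb": 0.45359237}


def to_kilograms(value, unit):
    """Convert a weight to kilograms, rejecting units that are not recognized."""
    key = unit.strip().lower()
    if key not in TO_KG:
        # Failing loudly keeps nonconforming values out of the master data.
        raise ValueError(f"unsupported unit: {unit!r}")
    return value * TO_KG[key]


print(to_kilograms(2, " LB "))
print(to_kilograms(500, "g"))
```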

Metadata management assists in integrating data from various sources, ensuring compatibility and cohesiveness.

Ensuring Data Accuracy and Consistency

Ensuring data accuracy and consistency is crucial for effective data management. Common issues such as manual entry errors, discrepancies in sources, and outdated information can hinder data accuracy and consistency. Poor data quality can lead to erroneous conclusions. This may result in costly mistakes that damage an organization’s credibility. Implementing data matching and deduplication tools can help identify and eliminate duplicate records, maintaining data integrity.

Regularly reviewing and updating data is essential for maintaining accuracy. For example, ensuring address data reflects current postal codes can prevent inaccuracies and improve data reliability.

Root cause analysis is also critical for diagnosing data quality issues and correcting underlying problems affecting data consistency. Adopting these practices enhances data accuracy and supports effective decision-making.

Integration of Diverse Data Sources

Integrating diverse data sources is a common challenge in data quality management. Self-service registration sites, CRM applications, ERP applications, and customer service applications all contribute to customer master data.

Effective integration of diverse data sources is essential for maintaining reliable data and supporting comprehensive data analysis.

Measuring Data Quality

Measuring data quality is essential for assessing the effectiveness of a data quality management framework. Key metrics serve to measure progress and provide insights into the current state of data quality. Regular checks are performed to measure the framework against established metrics. These evaluations help determine if the framework is effective or requires adjustments. Basic measurements, such as validity checks, assess whether data conforms to specified formats or rules. Validation techniques help ensure that the data collected is accurate and meets predefined standards.

Defining and tracking data quality metrics allows organizations to identify areas for improvement and maintain high data quality standards. By regularly monitoring these metrics, businesses can ensure that their data remains accurate, reliable, and fit for purpose. This proactive approach to measuring data quality supports continuous improvement and effective data management.
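A validity check of the kind described above can be sketched as a format test plus a ratio. The example assumes dates are expected in `YYYY-MM-DD` form; note that it checks the format only, so a value such as `2024-13-45` would still pass. The function name `validity_rate` is hypothetical.

```python
import re

# Expected format: four digits, two digits, two digits, separated by hyphens.
ISO_DATE = re.compile(r"\d{4}-\d{2}-\d{2}")


def validity_rate(values, pattern=ISO_DATE):
    """Share of values that fully match the expected format (0.0 if empty)."""
    if not values:
        return 0.0
    valid = sum(1 for v in values if isinstance(v, str) and pattern.fullmatch(v))
    return valid / len(values)


# Hypothetical sample: two of the four values use the expected format.
dates = ["2024-01-31", "31/01/2024", "2024-1-5", "2023-12-01"]
print(validity_rate(dates))
```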

Completeness

The completeness metric measures the availability of all necessary data in records. It is determined by checking if every data entry is complete with no missing fields. A simple method to measure completeness is counting the number of empty values within a dataset.

Regular audits and updates of data are essential to ensure it meets completeness standards and supports reliable analysis and decision-making.
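Counting empty values, as described above, translates directly into code. This sketch assumes records are dictionaries and that a missing key, `None`, or an empty string all count as empty; the field names are hypothetical.

```python
def completeness(records, required_fields):
    """Return (empty_count, completeness_ratio) across the required fields."""
    total = len(records) * len(required_fields)
    empty = sum(
        1
        for rec in records
        for field in required_fields
        if rec.get(field) in (None, "")
    )
    # With nothing to check, report full completeness rather than divide by zero.
    ratio = 1.0 if total == 0 else (total - empty) / total
    return empty, ratio


# Hypothetical records: one empty name and one missing email.
people = [
    {"name": "A", "email": "a@example.com"},
    {"name": "", "email": "b@example.com"},
    {"name": "C"},
]
print(completeness(people, ["name", "email"]))
```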

Accuracy

Accuracy in data quality management evaluates if the data truly represents the real-world entity. It ensures that the information is reliable and valid. Organizations can enhance data accuracy by implementing validation rules. Additionally, they can cross-reference information with trusted data sources. The ratio of data to errors is a vital metric that indicates the level of data accuracy over time.

By ensuring data validity through automated checks and validation rules, businesses can maintain high data accuracy standards and support informed decision-making.
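Cross-referencing against a trusted source and tracking the share of errors can be sketched as follows. The reference lookup, the `id` and `zip` field names, and the sample values are all hypothetical; records with no trusted counterpart are skipped rather than counted as errors, which is a design choice for this sketch.

```python
def accuracy_against_reference(records, reference, key, field):
    """Compare a field to a trusted reference; return (errors, checked, accuracy)."""
    checked = 0
    errors = 0
    for rec in records:
        trusted = reference.get(rec[key])
        if trusted is None:
            continue  # no trusted value available for this record
        checked += 1
        if rec[field] != trusted:
            errors += 1
    accuracy = None if checked == 0 else (checked - errors) / checked
    return errors, checked, accuracy


# Hypothetical data: the reference covers ids 1 to 3 only.
customers = [
    {"id": 1, "zip": "10001"},
    {"id": 2, "zip": "94105"},
    {"id": 3, "zip": "00000"},
    {"id": 4, "zip": "73301"},
]
trusted_zips = {1: "10001", 2: "94103", 3: "60601"}
print(accuracy_against_reference(customers, trusted_zips, "id", "zip"))
```

Recording the result on each run gives the trend over time that the accuracy metric is meant to capture.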

Consistency

Consistency in data quality management ensures that two data values from different datasets do not contradict each other. It measures uniformity across the dataset and alignment with standards. For example, the sum of employees in each department should not exceed the total number of employees, ensuring data consistency.

Regular consistency checks help maintain reliable data and support effective data management practices.
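The employee example above can be written as a one-line cross-dataset check. The function name and the sample figures are illustrative; the check mirrors the rule as stated, so it only flags departmental totals that exceed the company-wide total.

```python
def headcount_is_consistent(department_headcounts, total_employees):
    """True if departmental headcounts do not add up to more than the total."""
    return sum(department_headcounts.values()) <= total_employees


# Hypothetical figures from two different datasets.
by_department = {"engineering": 40, "sales": 25, "support": 10}
print(headcount_is_consistent(by_department, total_employees=80))
print(headcount_is_consistent(by_department, total_employees=70))
```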

Benefits of Robust Data Quality Management

Robust data quality management offers numerous benefits, including improved decision-making, enhanced operational efficiency, and regulatory compliance. Accurate and reliable data is essential for making informed decisions. It helps reduce errors and prevents costly mistakes. High-quality data supports risk management by enabling accurate threat analysis and effective response. In industries like finance and healthcare, the stakes are high, making high-quality data particularly important for business success.

A robust data quality management model provides a significant competitive advantage to organizations. It enhances the potential for businesses to avoid costly errors, maximize revenue opportunities, and maintain a strong reputation. By investing in data quality management tools and practices, organizations can ensure that their data remains a valuable asset and supports long-term strategic goals.

Improved Decision-Making

High-quality data significantly enhances decision-making by providing accurate and reliable insights. Accurate data gives a clearer understanding of daily operations, which aids efficient decision-making and helps prevent serious problems such as cash flow shortfalls. Data quality tools maintain data integrity and support informed decisions, helping organizations analyze trends, predict outcomes, and make strategic decisions.

Leveraging accurate data helps businesses avoid costly errors and achieve better outcomes.

Enhanced Operational Efficiency

High-quality data leads to more efficient business processes, higher ROI, and lower costs. Reliable data allows organizations to deliver better personalization and service to customers, fostering trust among stakeholders and enhancing the organization’s reputation.

Data quality management helps organizations avoid unnecessary expenses caused by mismanaged data, reducing the time spent on error correction and facilitating smoother business operations.

Regulatory Compliance

Implementing effective data quality measures is essential for organizations to adhere to regulations like GDPR and avoid heavy penalties. Compliance and risk management are supported by effective data quality management procedures, ensuring that data is accurate, reliable, and meets regulatory standards.

Tracking data lineage is crucial for data compliance, providing insights into data origins and transformations, and ensuring transparency in data management.

Best Practices for Data Quality Management

Adopting best practices for data quality management is essential for maintaining high standards and ensuring reliable data. A strong data quality governance framework enhances operational efficiency by minimizing errors and inconsistencies. Data stewards play a vital role in enforcing data usage and security policies, collaborating with various teams to establish common standards for managing data across the enterprise. Regular audits of data are necessary to identify inconsistencies and ensure high data quality.

Technological advancements influence data collection, storage, and consumption, requiring adaptation of DQM strategies. Data quality management strategies must safeguard data integrity, adapt to changing technologies, and foster a continuous learning culture. Following established guidelines and best practices helps organizations streamline processes, save time and costs, and maintain high data quality standards.

Fostering a Data Quality Culture

Fostering a data quality culture within an organization is crucial for sustaining high data quality standards. Data literacy among employees, achieved through training programs, workshops, and communication initiatives, ensures that all stakeholders understand their role in the data quality framework.

Effective training solidifies the human element of the framework, encouraging individuals and teams to value accurate data. Recognition and rewards play a vital role in promoting a culture of data quality and continuous improvement.

Continuous Improvement

Continuous improvement is a cornerstone of effective data quality management. It involves an ongoing process of reviewing data for anomalies and ensuring it meets quality standards. Setting specific, measurable objectives and metrics is critical for guiding data quality efforts and tracking progress toward continual improvement.

By establishing a data governance framework, organizations can ensure consistent handling of data, enhance quality, and support ongoing improvement initiatives.

Data Governance Frameworks

A robust data governance framework includes data policies, data standards, KPIs, business rules, and organizational structures. Key roles typically involved in a data governance framework are data owners, data stewards, and data custodians.

The data governance board plays a key role in establishing standards for data quality and ensuring compliance across the organization. Incorporating data governance frameworks allows organizations to manage data quality activities effectively and ensure consistent data management practices.

Summary

In conclusion, effective data quality management is essential for ensuring that data is accurate, reliable, and fit for purpose. By understanding the key components of data quality management, implementing robust strategies, defining clear roles, and leveraging advanced tools, organizations can maintain high data quality standards. The benefits of robust data quality management include improved decision-making, enhanced operational efficiency, and regulatory compliance. By adopting best practices and fostering a culture of continuous improvement, businesses can ensure that their data remains a valuable asset and supports long-term strategic goals.

Frequently Asked Questions

What is data quality management (DQM)?

Data quality management (DQM) ensures your data is accurate, reliable, and suitable for your needs by continuously monitoring and improving it. It’s all about making sure you can trust the data you’re using!

Why is data profiling important in DQM?

Data profiling is crucial in Data Quality Management because it uncovers errors and inconsistencies within your data. By doing so, it lays the groundwork for effective data cleansing and ongoing quality monitoring, helping you maintain high data standards.

How do data quality tools enhance data management?

Data quality tools significantly enhance data management by automating tasks like profiling and cleansing, which boosts efficiency and minimizes manual errors. This leads to more accurate, consistent, and reliable data that meets compliance standards.

What role does the Chief Data Officer (CDO) play in data quality management?

The Chief Data Officer (CDO) plays a vital role in overseeing data management and quality, aligning these efforts with business goals and ensuring that data-driven decision-making is supported. Their leadership helps to maintain high standards for data quality throughout the organization.

How can organizations foster a culture of data quality?

To build a culture of data quality, organizations should implement training programs and workshops that highlight the value of accurate data. Recognizing and rewarding efforts in maintaining high standards can really motivate teams to prioritize data quality.