For many Chief Data Officers, the mandate has never been broader or more unforgiving. Fellow executives demand reliable data to power AI initiatives. Risk committees want assurance that compliance standards are being met. And boards ultimately expect measurable ROI from years of investment in data governance.

73% of executives are unhappy with their data quality, and 48% admit they’ve made decisions based on bad data within the past six months (Capgemini).

The problem isn’t effort. Most large enterprises have invested heavily in governance frameworks, lineage tracking, and observability. Yet the confidence gap remains. The irony of enterprise data quality is that the more structure you add, the slower you move. Every control introduces new complexity, every sign-off another delay. And still, the same question lingers: is our data quality good enough? Are we covered?

Why Reactive Data Quality Persists

Reactive data quality drains resources and erodes trust. So why does it continue?

The issue is firstly foundational. We wait for things to break before we prioritize addressing. If a data asset hasn’t had known anomalies with it, the human inclination is to think “it’s good enough.”

The second issue is largely organizational. Many Fortune 2000 companies are structured in silos, with fragmented ownership and competing priorities. Data teams focus on technical accuracy and uptime; business teams focus on context and outcomes. Governance often lives between them, defining policies but limited in ability to enforce consistent behavior.

As a result, quality problems are addressed after they surface. A business user spots the issue, an engineer tracks it down, and an analyst writes a rule to patch it. Each fix is valid but isolated. The system never learns. This reactive process relies on humans noticing, triaging, and fixing issues one by one—a cycle that doesn’t scale when data volumes and AI initiatives multiply the number of “spotters” and “fixers” overnight.

Capgemini’s survey suggests that poor data quality can erode 15–25% of revenue in more extreme cases.

The firefighting continues because incentives, tools, and accountability are disconnected. And for most companies today, the speed and breadth at which their data quality can be improved is largely limited to human speed, breadth, and imagination.

The Stakes Are Continuously Rising

The enterprise data landscape has outgrown traditional governance. Multi-cloud and on-prem systems coexist. Mergers introduce inconsistent architectures. AI projects demand data that is explainable and traceable from source to model.

The 1:10:100 rule, introduced by George Labovitz and Yu Sang Chang in their 1992 paper, illustrates the compounding cost of poor data.

- $1 to correct an anomaly at the source

- $10 to remediate an anomaly once detected

- $100 when a bad decision is made from a bad data point

- Up to $1 million (figuratively) when flawed data influences automated or AI-powered insights

This framework captures how bad data multiplies in impact as it flows downstream. Imagine a mislabeled product price propagating through an e-commerce system. It costs nearly nothing to catch that typo at the source. But if it makes its way into a sales report, an ERP feed, or an AI demand forecast, the fallout can be enormous—lost revenue, inaccurate pricing decisions, and distorted machine learning models. (Remember the Lyft CFO who typo’d an extra zero into an earnings report and caused a massive market meltdown?)

Gartner estimates that poor data quality costs organizations an average of $12.9 million per year, a number that’s ballooning as AI scales decision-making across the enterprise. When AI models depend on unverified inputs, the risks become exponential financially, reputationally, and operationally.

What Augmented Data Quality Really Means

Automation alone doesn’t solve the quality problem. Most enterprises already have some level of monitoring, usually in the form of simple dashboards that track pipeline failures and schema drift. What they lack is continuous learning. Traditional DQ systems require manual rule creation after each incident. Augmented approaches invert this: the system observes patterns, proposes rules, and adapts as data evolves. Instead of data engineers writing thousands of validation rules, they instead focus on reviewing and refining hundreds of auto-generated, mathematically accurate suggestions.

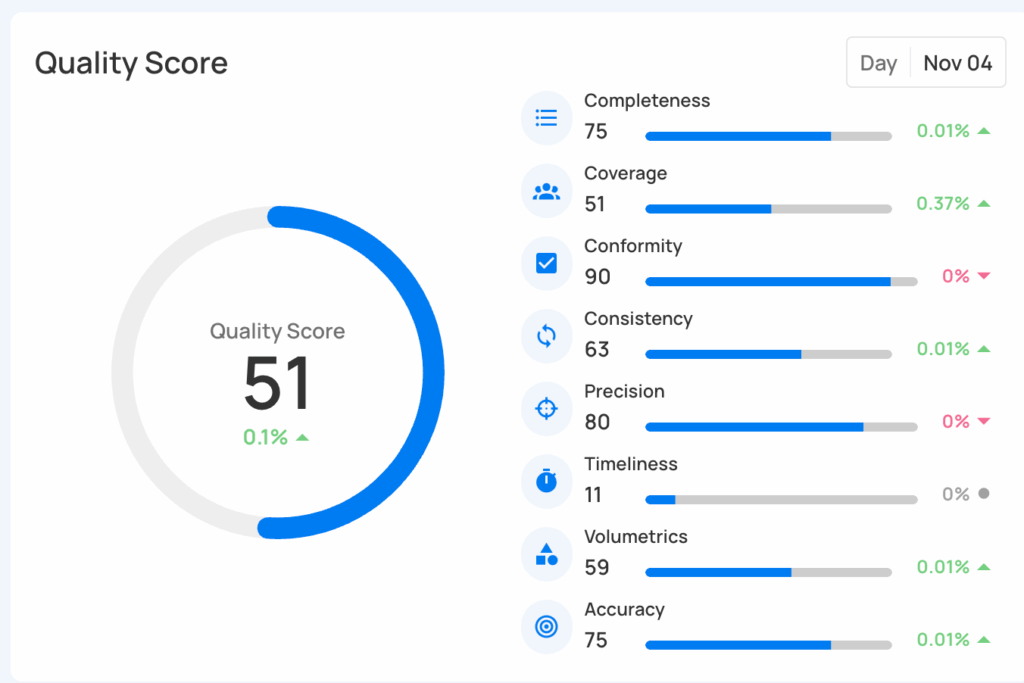

Augmented Data Quality combines automated detection with human judgment and contextual understanding. Automation scales the coverage, but interpretation still requires business context. At its core, augmented data quality is continuous, explainable, and collaborative:

- Continuous because it monitors and learns across data lifecycles rather than reacting to incidents.

- Explainable because every anomaly can be traced back to a rule that traces back to the shapes and patterns of the data asset(s), as well as its source and an owner.

- Collaborative because quality depends on both technical integrity and business logic.

Technical accuracy is not enough on its own. Business teams know whether a metric makes business sense; data teams ensure that it’s calculated correctly. Augmented Data Quality unites those perspectives through shared accountability.

Data Quality Across Complex Environments

Hybrid infrastructure compounds the challenge. Many enterprises operate with decades-long investments in on-prem systems alongside multi-cloud environments. Data flows through platforms that were never designed to speak the same language.

Manual data quality processes break down in this setting. Each environment has its own tools, security constraints, and standards for data movement. Augmented Data Quality provides a way to establish visibility across that fragmentation, allowing detection and remediation to occur within a unified framework while respecting local constraints.

In practice, that means a single lens on quality across sources whether the data is in legacy mainframe stacks, databases, warehouses, or lakes.

Making Data AI-Ready

AI readiness requires more than clean data. It demands confidence in where data comes from, how it has changed, and whether it reflects real-world truth. Explainability and metadata around the data is non-negotiable to build this trust in data as it goes through many transformations. Training models on inaccurate or biased inputs introduces risk that automation can amplify exponentially.

AI readiness introduces requirements that traditional analytics don’t demand:

- Provenance for explainability: When a credit model denies an application, regulators require traceability to source data. ‘Our model said so’ isn’t sufficient.

- Drift detection in real-time: Traditional BI tolerates minor data shifts. GenAI models degrade rapidly when training distributions diverge from production inputs.

- Bias visibility across the pipeline: A column that’s ‘clean’ for reporting can still encode bias. For example, customer sentiment analysis trained on support tickets may systematically misunderstand satisfaction levels—frustrated customers often call, while satisfied ones use self-service portals. The sentiment score is technically accurate but reflects channel selection bias, not true customer sentiment.

Most DQ frameworks weren’t designed with these requirements in mind. Retrofitting them is possible, but resource intensive. Augmented Data Quality supports AI readiness by maintaining provenance and ensuring that data used for model development and decisioning is explainable and verifiable.

AI-ready data is governed data. Consistent, interpretable, and suitable for automated reasoning.

Building Proactive Trust

Proactive data quality isn’t about more control. It’s about visibility and confidence at every stage of the data lifecycle.

When business and technical teams share accountability for quality, trust becomes measurable. Data leaders can demonstrate compliance with clear evidence of prevention, not just remediation. Analysts gain confidence to act on insights. Executives make decisions faster.

Technology alone can’t fix data quality. Automation can surface anomalies at scale, but only humans can apply business logic and context to determine whether those anomalies matter and why they occur. True augmentation happens when automation accelerates detection and human context guides interpretation. That partnership creates proactive trust and changes data quality from a back-office function to an enterprise capability that supports growth, innovation, and AI adoption.

What Good Looks Like

Leading enterprises demonstrate what augmented data quality can achieve:

- A global investment management firm realized 18x ROI in its first year, scaling to more than 20,000 rules with 96% automation.

- A financial institution reduced financial reconciliation efforts by 95%, managing $250 billion in assets with near-complete automation for 500 investment ops professionals.

- Another enterprise achieved 90% coverage on day one, engaging more than 50 business users to co-own data quality alongside the data ops team.

Each of these examples underscores the same principle: automated where possible, contextual where necessary, governed throughout.

From Firefighting to Foresight

CDOs who succeed in this new environment focus on foresight rather than response. They connect quality metrics to business impact, use automation to augment manual work, and make trusted data the foundation of AI initiatives.

Data foresight isn’t abstract. It’s the ability to predict where issues will occur and prevent them before they disrupt operations or erode trust. With augmented data quality, foresight becomes a measurable discipline.

Ask your team: Where are we spending the most time on reactive fixes? Which systems would we never use for AI training because we don’t trust the data? Those answers reveal where foresight is needed most.

Enterprises prepared in this way move faster, comply easier, and deliver insight with confidence.

Schedule a demo to see how Qualytics can automate 95% of your data quality rules so your data teams can focus on what really matters: building trust that scales.