Preparing Financial Data for AI: A Practical Playbook for De-Risking Data Quality in Financial Institutions

Few industries experience the consequences of bad data as acutely as financial services. When errors propagate across interconnected systems that power credit decisions, claims payouts, portfolio valuations, liquidity reporting, it’s felt across the business. With AI increasingly embedded into these workflows, that propagation now happens at machine speed. A single upstream data error can flow into automated decisioning, analytics, or reporting pipelines and influence thousands of outcomes before anyone detects it.

For banks, insurers, and asset managers facing regulatory scrutiny and AI adoption pressures, discovering issues downstream is more than inefficient. It erodes trust, increases model risk, and introduces operational fragility at a time when regulators expect transparency, accountability, and resilience.

This playbook is written to help data leaders in financial institutions modernize under real-world constraints. It explains why proactive data quality is now foundational for AI and outlines how to build a program that reduces risk while still enabling the business to move faster.

AI Raises the Stakes for Data Quality in Financial Services

Financial institutions have always depended on data quality to make sound decisions, meet regulatory obligations, and maintain confidence with customers, counterparties, and supervisors. What changes with AI is the scale and speed at which data issues translate into real-world impact.

When a model ingests an inaccurate value—an outdated product code, a mislabeled exposure, a missing claims attribute—it doesn’t produce one flawed result. It repeats the mistake, consistently and at scale. What might once have been a contained issue on a BI report becomes a systemic risk when algorithmic decisions replicate the error thousands of times. That’s why executive concern is rising. Nearly three-quarters of leaders say they are unhappy with their data quality, and almost half admit to making decisions based on inaccurate data in the past six months.

Beyond internal risk, these failures increasingly affect customer relationships. According to Accenture’s global banking consumer study, trust and advocacy are primary drivers of growth, yet they are fragile. Inconsistent decisions, incorrect pricing, delayed claims, or unexplained changes in outcomes all undermine confidence. When AI-driven systems behave unpredictably due to poor data quality, customers experience it not as a data problem, but as a breach of trust.

Why Proactive Data Quality Is Now Table Stakes

AI readiness in financial services is often framed as a modeling challenge. In practice, it is a data supply chain problem.

Most institutions operate across highly heterogeneous environments. Core banking systems, policy administration platforms, trading engines, CRMs, and cloud warehouses all speak different dialects. Data teams spend enormous effort keeping these systems aligned. Third-party data compounds the challenge, introducing constant variability, schema drift, and reconciliation overhead.

One global asset manager using Qualytics was reconciling data from dozens of sources and thousands of weekly files across a $250B portfolio. After implementing proactive data quality, reconciliation efforts dropped by 95%. The environment did not become simpler; issue detection was automated resulting in faster and more consistent resolution.

Regulatory expectations reinforce the need for this shift. Frameworks such as BCBS 239, NAIC guidance, and OCC model risk management all emphasize the same core principle: institutions must understand where data comes from, how it changes, and who is accountable. AI governance builds directly on that foundation. Supervisors may tolerate imperfect models, but they will not tolerate opaque or untrusted inputs.

Reactive data quality is increasingly unacceptable. The cost is too high, and the tolerance for after-the-fact explanations is shrinking.

How These Data Quality Risks Show Up Across Financial Services

While the underlying challenges of data quality are shared across industries, they manifest differently across financial services segments.

For banks, the risk concentrates around model reliability and regulatory reporting accuracy. Small data issues can quickly turn into model risk management (MRM) failures when flawed inputs feed credit, fraud, or stress testing models. As Basel IV reporting and supervisory scrutiny increase, data aggregation lineage and traceability are no longer “nice to have.” Banks need confidence that the data powering regulatory submissions and AI-driven decisions is consistent, explainable, and auditable.

For alternative asset managers, the pressure shows up in reconciliation and reporting. Fund-level data arrives from dozens of administrators, portfolio companies, and third-party providers, often via manual, Excel-heavy processes. Inconsistent inputs make LP reporting harder to trust and portfolio-level risk aggregation harder to scale. Without proactive controls, teams spend more time reconciling numbers than analyzing them.

For insurers, data quality risk often surfaces in underwriting and claims automation. Models depend on historical attributes that may drift over time, while claims pipelines pull from multiple legacy systems that were never designed to work together. At the same time, NAIC and IFRS reporting expectations require consistency and transparency across those systems. When data issues slip through, the result is risk leakage, manual rework, and delayed insight.

Across all three segments, the pattern is the same: once bad data reaches models, reports, or regulators, the cost of correction multiplies.

A Practical Playbook for De-Risking Data Quality

Establish Centralized Oversight and Clear Ownership

Proactive data quality starts with visibility. Financial institutions need a unified view of the data flowing through critical domains (credit, claims, trades, policies, payments, market data) along with clear ownership across business and technical teams.

Strong programs organize data by business domain, define ownership jointly, and maintain quality from raw inputs through analytical features and regulatory submissions. When accountability is explicit, data quality improves, ambiguity decreases, and people know what they own and what “good” looks like.

Build Data Foundations That Can Absorb Change

AI workloads demand architectures that support scale, schema evolution, and metadata capture without constant breakage. Legacy, batch-oriented systems delay change detection and let quality issues slip downstream.

Modern foundations allow schemas to evolve without silently breaking reports, handle high volumes with predictable performance, and capture the metadata needed for automated rule management. They also enable time-series validation on financial KPIs, exposures, and loss ratios. When data foundations are designed to accommodate schema evolution, volume shifts, and changing business definitions—rather than assuming static structures—data quality becomes far easier to manage.

Turn Governance into an Operating Control

In high-performing financial institutions, governance is not a static inventory or a policy exercise. It’s a living control system.

Critical data elements that feed high-risk decisions and models are explicitly identified and treated as first-class assets. Validation checks are documented, continuously monitored, and tied to clear escalation paths when drift occurs. Just as importantly, business teams participate directly in determining which anomalies matter and which don’t, ensuring controls reflect real operational risk rather than theoretical thresholds. In this model, proactive data quality turns governance into something enforceable, testable, and auditable in day-to-day operations.

Combine Automation with Human Judgment

Automation is what makes proactive data quality sustainable at enterprise scale, but automation alone is not enough. Leading institutions start with broad, automated coverage and refine it with domain expertise.

One global investment manager implemented more than 20,000 data quality rules with Qualytics, most of them automatically generated, and achieved an estimated 18× ROI in the first year. AI-suggested rules surfaced relationships and outliers that manual rules rarely catch, while underwriting, finance, actuarial, and portfolio teams tuned thresholds based on business context.

The result is a system that improves over time instead of going stale.

Use Quality Gates to Protect Models and Reports

Quality gates act as pre-flight checks for data. They ensure flawed inputs never reach underwriting models, pricing engines, risk calculations, or regulatory submissions.

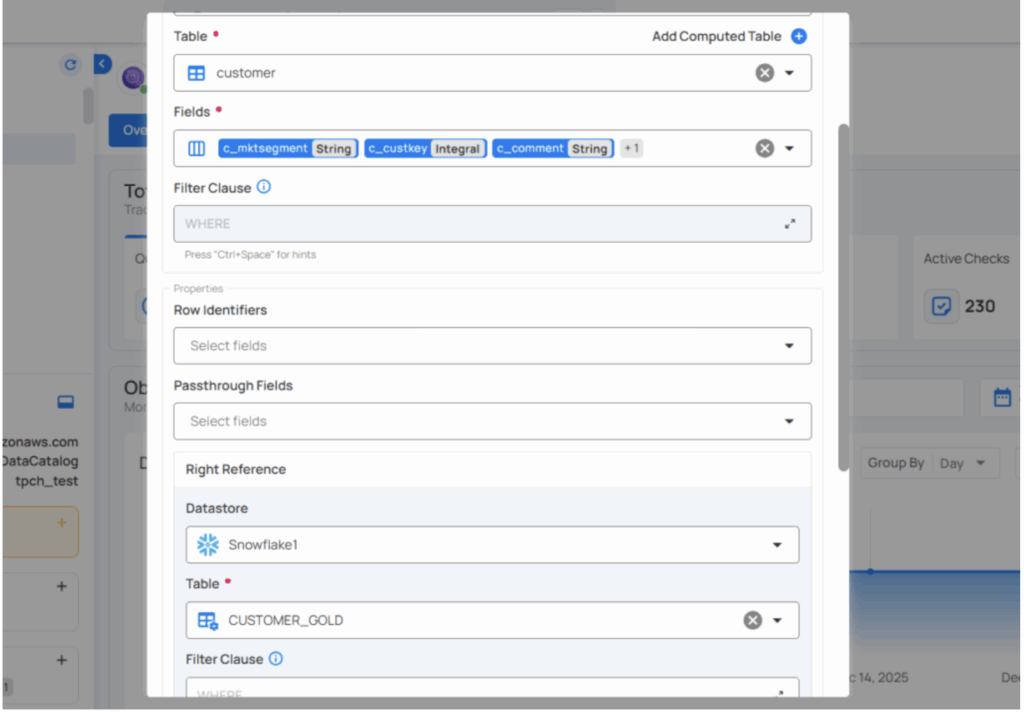

High-performing teams validate third-party data before ingestion, detect schema drift before features change, reconcile systems of record with analytical stores, and monitor time-series behavior on critical metrics.

One asset manager moved from quarterly to weekly reconciliation across tens of thousands of files after automating these controls, without adding headcount.

Stable inputs slow model drift. Prevention reduces downstream rework.

Make Data Quality a Shared Responsibility

Data quality cannot live exclusively with data teams. Financial institutions succeed when domain experts actively shape the system.

Shared ownership works because each group plays a distinct role. Business teams define acceptable ranges and evolve them as products, markets, and assumptions change, while data engineers operationalize those expectations in production systems without becoming bottlenecks. Governance teams maintain policies, processes, and ownership across domains, so issues are routed to people who understand both the data and its business meaning. The result is faster resolution, clearer accountability, and a system that scales without centralizing every decision.

Embed Data Quality Controls into AI Governance, Privacy, and Security

AI governance frameworks consistently identify data quality, security, and privacy as interdependent control domains. Financial institutions must ensure sensitive data is continuously monitored to prevent unintended exposure. Any exposure must be documented with root cause and downstream impact analysis to support regulatory examination and auditability. When data anomalies involve PII or other sensitive attributes, escalation must be immediate, governed, and fully auditable.

Trusted AI depends on trusted, well-controlled data.

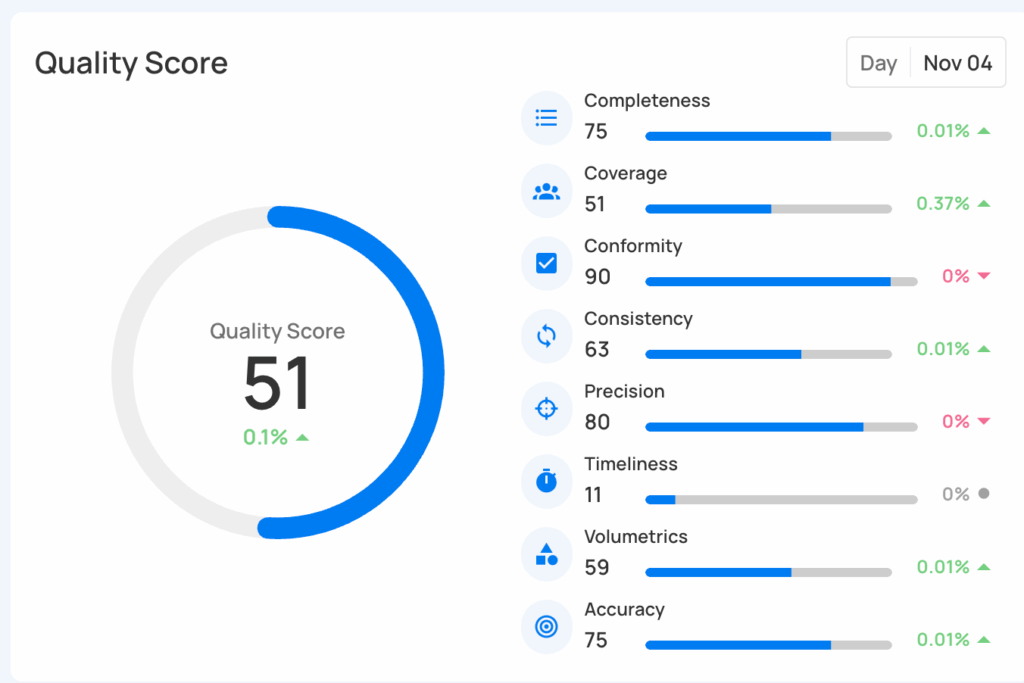

What Proactive Data Quality Enables

When data quality is proactive, automated, and explainable, the benefits extend well beyond risk mitigation to measurable revenue and cost outcomes. Issues are detected earlier in the data lifecycle, reducing operational risk, limiting downstream rework, and lowering the cost of correction. Well-defined, continuously enforced data quality controls increase regulatory confidence, while AI initiatives move faster and perform more reliably because models are trained on stable, continuously monitored inputs. Reporting cycles shorten, pricing and reserving decisions improve, revenue leakage is reduced, and teams spend less time reacting to issues that can be resolved faster through proactive controls.

Most importantly, data quality becomes a strategic enabler rather than a compliance checkbox.

The Path Forward

AI is reshaping financial services. But it is only as safe, reliable, and valuable as the data that powers it.But it cannot be safer or more valuable than the data that powers it.

Institutions that invest in proactive data quality today are not just reducing risk. They are building a durable advantage of greater trust, greater speed, and greater readiness for an AI-driven future.

Explore how Qualytics helps financial institutions strengthen data quality for AI readiness.