Scaling data quality is essential for making accurate decisions based on reliable data. This article explains how to manage data quality at scale with key strategies and practical steps, helping you maintain data integrity as your data grows.

Key Takeaways

- Data quality management is essential for informed decision-making and requires a structured strategy that addresses completeness, coverage, conformity, consistency, precision, timeliness, volumetrics and accuracy throughout the data lifecycle.

- Establishing a robust data governance framework is critical for ensuring accountability and standardization in data management, which helps in overcoming challenges such as data silos and human error.

- Leveraging technology, including AI and data quality tools, enhances the scalability and efficiency of data quality processes, enabling organizations to maintain high standards and simplify monitoring and cleansing efforts.

Understanding Data Quality at Scale

Data quality management is vital for success in the digital age and should be a strategic priority. High-quality data leads to better decisions based on accurate analytics rather than gut feelings.

To maintain a competitive edge and achieve long-term success, organizations need data that performs well on eight dimensions: completeness, coverage, conformity, consistency, precision, timeliness, volumetrics, and accuracy.

Poor data quality can result in significant financial losses and missed opportunities, impacting overall business performance.

E-commerce companies, for example, often struggle with scaling tailored customer experiences due to inadequate data quality. Inaccurate or incomplete data can lead to incorrect conclusions and decisions, negatively affecting financial performance. An effective data quality management framework should cover the entire data lifecycle, ensuring that high-quality data guides business direction and enhances operational efficiency.

To achieve this, organizations need a structured approach with defined steps and processes. A robust data quality strategy ensures accuracy, reliability, and value, while effective data governance facilitates decision-making based on accurate data. High data quality helps businesses make sense of their data, ensuring that it is appropriate for its intended purpose.

Next, we’ll explore the key aspects of understanding data quality at scale: defining data quality, challenges in scaling it, and the importance of a data governance framework.

Defining Data Quality

High-quality data is marked by:

- Completeness

- Coverage

- Conformity

- Consistency

- Precision

- Timeliness

- Volumetrics

- Accuracy

Examples of high-quality data include correct names, addresses, and contact details, all of which are crucial for making informed business decisions.

Maintaining the trustworthiness of your data throughout its lifecycle is the essence of data integrity. When poor-quality data enters the picture, it can undermine your analysis and erode confidence in your entire data ecosystem. Faulty data doesn’t just lead to poor decisions—it creates skepticism around future insights. That’s why accuracy (getting the facts right) and consistency (keeping everything aligned) are critical.

However, true data quality goes beyond that. It also requires completeness (ensuring no vital information is missing) and coverage (capturing all necessary data points). Conformity ensures your data adheres to standard formats, while precision ensures the details are as granular as needed for reliable analysis.

Equally important is timeliness—decisions are only as good as how current your data is. And volumetrics help you monitor the size and flow of data to spot any anomalies or gaps. By setting up regular refresh protocols and routinely evaluating these aspects, you can ensure your data remains high-quality, enabling better, more confident decision-making.
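Two of these dimensions, completeness and conformity, are easy to express as ratios. The following is a minimal sketch, assuming records arrive as Python dictionaries; the sample records, the field names, and the five-digit ZIP rule are illustrative assumptions rather than part of any particular tool.

```python
import re

# Hypothetical sample records; None marks a missing value.
records = [
    {"name": "Ada Lovelace", "email": "ada@example.com", "zip": "10001"},
    {"name": "Alan Turing", "email": None, "zip": "1000"},
    {"name": None, "email": "grace@example.com", "zip": "94105"},
    {"name": "Edsger Dijkstra", "email": "edsger@example.com", "zip": "73301"},
]

# Assumed format rule for this sketch: a five-digit ZIP code.
ZIP_PATTERN = re.compile(r"\d{5}")


def completeness(rows, field):
    """Share of rows where the field is present and non-empty."""
    if not rows:
        return 0.0
    filled = sum(1 for row in rows if row.get(field) not in (None, ""))
    return filled / len(rows)


def conformity(rows, field, pattern):
    """Share of non-missing values that fully match the expected pattern."""
    values = [row[field] for row in rows if row.get(field) not in (None, "")]
    if not values:
        return 0.0
    matching = sum(1 for value in values if pattern.fullmatch(value))
    return matching / len(values)


print("email completeness:", completeness(records, "email"))
print("zip conformity:", conformity(records, "zip", ZIP_PATTERN))
```

Tracking these ratios per field over time gives a simple baseline against which later refreshes can be compared.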

Challenges in Scaling Data Quality

Whether an organization runs a modern or a legacy data stack, it faces data quality challenges of varying degrees, and those challenges grow with scale. Data operations are often complex: siloed data from transactional systems is typically brought into a centralized store (a data warehouse or data lake) through ETL/ELT processes, and data pipelines then transform that data further into usable formats.

Data generators and data consumers often sit in different parts of the organization. These silos create inefficiencies and leave consumers without visibility into whether data is fit for use, or into known idiosyncrasies that are not apparent from the data itself. Without a full view of the data, cataloged and documented with supporting metadata, overall data quality suffers significantly.

Human error during data entry significantly contributes to inaccuracies, adversely affecting data quality. Address data, for instance, is often prone to errors due to manual entry, further impacting overall data quality. Lack of standardized formats and conventions leads to inconsistencies and inefficiencies in data management, making it challenging to ensure high-quality data across the organization.

Misunderstandings, typos, use of abbreviations, and varying formats commonly cause issues in data matching, contributing to data quality challenges. Organizations must address these challenges by implementing standardized processes to improve data quality and ensure consistent, reliable data for making informed decisions.

Finally, and most importantly, the manual and reactive status quo of writing data quality rules only after an anomaly arises does not scale. Organizations need to prioritize proactive data quality management in order to scale their data governance and data quality frameworks.

Importance of Data Governance Framework

Effective data governance is crucial for managing data quality, ensuring accountability and oversight. Strong data governance frameworks help standardize and improve data quality across organizations. Key components of a robust data governance framework include defining data management rules, policies, and quality standards.

Roles in a data governance framework encompass data owners, data stewards, and data custodians, all essential for maintaining data integrity. Clear data governance policies ensure the accountability needed for effective data management. A robust data quality strategy includes governance policies that oversee data management, enhancing overall data quality.

Poor data governance considerably weakens accountability, which in turn hampers effective data quality initiatives. A structured framework is necessary for implementing effective data quality management and securing data integrity. Standardized processes through governance frameworks are essential for improving overall data quality.

Key Elements of a Scalable Data Quality Strategy

Data integrity and reliability are essential for informed business decisions and minimizing financial losses. Data quality is vital for successful business operations, influencing overall effectiveness and efficiency. Organizations must develop a scalable data quality strategy that encompasses people, processes, and technology to turn the tide on data quality issues.

A strong data quality strategy includes processes for accurate data classification, which contributes to improved data security. Maintaining high-quality data at scale requires more than just simple monitoring; it necessitates strategic approaches. Enterprise data teams must actively manage and evolve their data quality management practices to ensure operational efficiencies and strategic advantages in the market.

In the following subsections, we will explore the key elements of a scalable data quality strategy, including establishing data ownership and accountability, implementing data quality metrics, and continuous data profiling and assessment.

Establishing Data Ownership and Accountability

Data ownership assigns individuals or teams the responsibility to ensure the accuracy and integrity of data sets. Appointing data stewards ensures clear accountability and monitoring for data quality improvement. Data stewards are responsible for assessing data quality, improving review procedures, and overseeing governance, contributing to a culture of data stewardship where teams actively maintain quality.

A data governance framework should outline data policies, standards, KPIs, and data elements to address. Clear accountability fosters a culture of data stewardship, ensuring data remains accurate and reliable throughout its lifecycle.

Implementing Data Quality Metrics

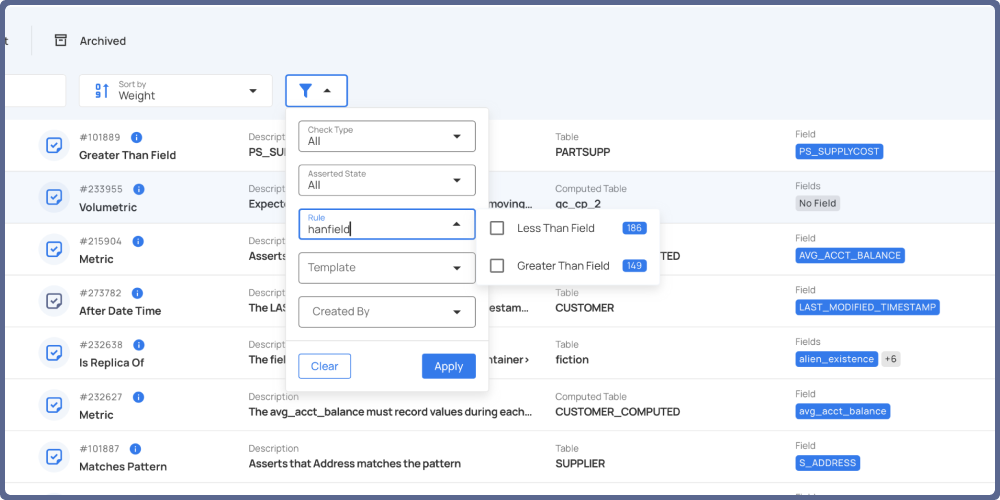

Defining data quality metrics establishes measurable criteria for evaluating data quality. Data quality Key Performance Indicators (KPIs) should be linked to general KPIs for business performance, helping organizations identify trends and areas of improvement. Monitoring these metrics allows organizations to measure data accuracy, completeness, and consistency, ensuring that data quality remains high.

Data profiling helps identify patterns, anomalies, and errors that impact data quality, providing valuable insights for measuring data quality KPIs. Data quality dashboards serve to visualize key metrics, enabling quick identification of issues and facilitating timely corrective actions.

Continuous Data Profiling and Assessment

Data profiling is a thorough examination of data to understand its characteristics and quality, allowing organizations to analyze existing data and summarize it. Regular data profiling should be conducted as an ongoing process to identify patterns and issues that affect data quality across the organization.

Data profiling tools provide insights that identify anomalies, outliers, and patterns in data, thereby measuring data integrity and supporting the understanding of data assets.

Challenges in data profiling typically arise due to its complex and time-consuming nature, making consistency and regular reviews necessary to ensure effective quality management.
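To make the idea of a profile concrete, here is a minimal sketch of a single-column summary using only the Python standard library. The function name `profile_column` and the sample values are hypothetical; dedicated profiling tools report far more than this.

```python
from collections import Counter


def profile_column(values):
    """Summarize one column: row count, nulls, distinct values, range, top value."""
    present = [v for v in values if v is not None]
    counts = Counter(present)
    return {
        "rows": len(values),
        "nulls": len(values) - len(present),
        "distinct": len(counts),
        "min": min(present) if present else None,
        "max": max(present) if present else None,
        "most_common": counts.most_common(1)[0][0] if counts else None,
    }


# Hypothetical order amounts; the 5000 stands out against the other values.
order_amounts = [20, 35, None, 20, 5000, 18]
profile = profile_column(order_amounts)
print(profile)
```

Running the same summary on a schedule and comparing it with the previous run is one simple way to turn a one-off profile into an ongoing assessment.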

Techniques for Maintaining Data Integrity

Data integrity refers to maintaining consistent relationships between entities and attributes. Implementing robust data integrity practices is essential to safeguard against breaches and ensure accurate data for decision-making. Validation rules and automated checks can be used to ensure data validity, providing a structured approach for effective data quality management.

Regular assessments help identify any lapses in data quality and ensure corrective actions are promptly taken. To maintain data integrity, organizations should employ various techniques, including data standardization, automated data cleansing, and validation and verification processes.

Data Standardization Practices

Data entry inconsistencies can frequently occur. This often stems from differences in data formats, definitions, or standards. Standardizing data formats eliminates discrepancies, ensuring consistent data across systems. Using standardized value formats, such as uniform street names, can significantly improve data matching. Implementing standardized address formats and validation checks can ensure consistency in address data, contributing to overall data quality.

Clear guidelines for data entry are essential for maintaining data conformity across the organization. By establishing and adhering to data standardization practices, organizations can prevent data quality issues and ensure high-quality data across all systems and processes.
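As a rough illustration of standardizing street names, the sketch below collapses whitespace, normalizes capitalization, and expands a few suffix abbreviations. The abbreviation map and the function name `standardize_address` are assumptions made for this example; a production system would rely on a fuller reference list and would need to handle ambiguous cases (for instance, "St" meaning "Saint").

```python
import re

# Assumed abbreviation map for this sketch only.
STREET_SUFFIXES = {"st": "Street", "ave": "Avenue", "rd": "Road", "blvd": "Boulevard"}


def standardize_address(raw):
    """Collapse whitespace, capitalize words, and expand known suffix abbreviations."""
    words = re.sub(r"\s+", " ", raw.strip()).split(" ")
    cleaned = []
    for word in words:
        key = word.rstrip(".,").lower()
        # Use the expanded suffix when known; otherwise just normalize the casing.
        cleaned.append(STREET_SUFFIXES.get(key, word.strip(",").capitalize()))
    return " ".join(cleaned)


print(standardize_address("  123  main st. "))
print(standardize_address("45 OAK AVE"))
```

Once both sides of a comparison pass through the same function, "123 main st." and "123 Main Street" resolve to the same string, which is what makes downstream matching more reliable.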

Automated Data Cleansing

Data cleansing tools identify and rectify errors, eliminating inconsistencies for improved accuracy. The purpose of data cleansing is to examine and clean data for mistakes, duplicates, and inconsistencies, enhancing data accuracy and completeness. Automation in data cleansing is critical for identifying and rectifying errors before they affect data quality, streamlining the cleansing process.

Tools and algorithms for duplicate detection and resolution are essential for maintaining clean data. Automated tools can also help verify the accuracy of address data after it has been entered, ensuring reliable data for decision-making.
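One simple form of duplicate detection is to group records by a normalized match key. The sketch below assumes each record has `id`, `name`, and `email` fields (hypothetical names) and treats records as duplicates only when the normalized name and email agree exactly; commercial tools typically add fuzzy matching on top of this.

```python
import re
from collections import defaultdict


def match_key(record):
    """Normalized key: lowercase name without punctuation or extra spaces, plus lowercase email."""
    name = re.sub(r"[^a-z0-9 ]", "", record["name"].lower())
    name = " ".join(name.split())
    return (name, record["email"].strip().lower())


def find_duplicates(records):
    """Return lists of record ids that share the same match key."""
    groups = defaultdict(list)
    for record in records:
        groups[match_key(record)].append(record["id"])
    return [ids for ids in groups.values() if len(ids) > 1]


# Hypothetical customer records with one disguised duplicate.
customers = [
    {"id": 1, "name": "Jane O'Neil", "email": "jane@example.com"},
    {"id": 2, "name": "jane  oneil", "email": "JANE@example.com "},
    {"id": 3, "name": "John Smith", "email": "john@example.com"},
]
duplicate_groups = find_duplicates(customers)
print(duplicate_groups)
```

Resolution (deciding which record survives and how fields are merged) is a separate step and usually depends on business rules.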

Data Validation and Verification

Data validation involves checking for adherence to defined rules and formats to maintain data integrity. Regular audits and validation checks are essential for maintaining high data quality and ensuring compliance with quality standards. Data profiling is critical for measuring and assessing data quality metrics, providing insights into data accuracy and consistency.

Periodic audits enhance data reliability by uncovering discrepancies and ensuring compliance. Organizations must establish protocols for regular data validation and verification, ensuring that data remains accurate, consistent, and reliable throughout its lifecycle.

Leveraging Technology for Data Quality Management

Technology solutions that utilize AI methods can significantly enhance the efficiency of managing data quality at scale. Data quality tools and master data management systems are crucial for automating and maintaining consistency in data quality efforts. Automating data quality checks can significantly reduce errors and improve employee productivity, ensuring that data quality remains high.

Consistent data quality tracking helps identify and address unknown anomalies effectively. Continuous monitoring helps in identifying data quality issues before they escalate, ensuring that data remains reliable and accurate.

In the following subsections, we will explore the various technologies that can be leveraged for data quality management, including data quality tools and platforms, the role of machine learning and AI, and data observability solutions.

Data Quality Tools and Platforms

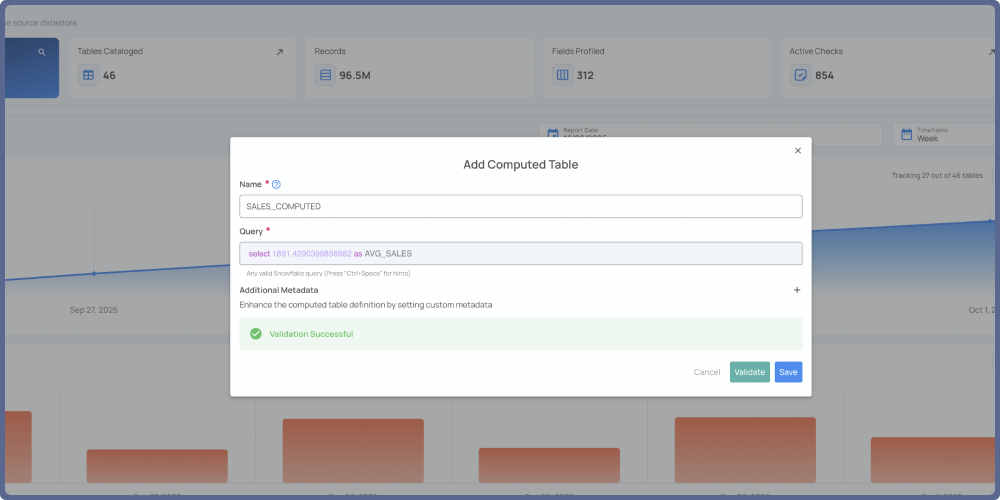

Enterprise data quality tools monitor, manage, and improve data quality across various systems. These tools ensure data accuracy, consistency, reliability, and compliance with industry standards. Data validation tools check incoming data against predefined rules, including format checks, range checks, and cross-field validation, enhancing overall enterprise data quality management.
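The three kinds of checks named above can be sketched in a few lines. This example assumes a hypothetical booking record with `email`, `passengers`, `departure_date`, and `return_date` fields; the email pattern is deliberately simple and the passenger limit is an invented business rule.

```python
import re
from datetime import date

# Deliberately simple pattern for illustration; not a full email validator.
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def validate_booking(row):
    """Return a list of rule violations for one incoming record."""
    errors = []
    # Format check: the value must look like an email address.
    if not EMAIL_PATTERN.fullmatch(row["email"]):
        errors.append("format: email")
    # Range check: assumed limit of 1 to 9 passengers per booking.
    if not 1 <= row["passengers"] <= 9:
        errors.append("range: passengers")
    # Cross-field check: the return cannot precede the departure.
    if row["return_date"] < row["departure_date"]:
        errors.append("cross-field: return_date before departure_date")
    return errors


good = {"email": "pat@example.com", "passengers": 2,
        "departure_date": date(2024, 5, 1), "return_date": date(2024, 5, 8)}
bad = {"email": "pat-at-example", "passengers": 0,
       "departure_date": date(2024, 5, 8), "return_date": date(2024, 5, 1)}

print(validate_booking(good))
print(validate_booking(bad))
```

Returning a list of violations, rather than stopping at the first failure, makes it easier to report every problem with a record in one pass.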

Data quality tools are stronger when coupled with other data governance platforms, namely data catalogs and Master Data Management (MDM) tools. Data catalogs provide a central location for maintaining metadata and business glossaries about data assets, while MDM tools focus on creating and managing a single, authoritative source of master data to maintain consistency and accuracy.

Selecting the right data quality tools depends on the unique challenges and objectives faced by an organization. For instance, a company such as JetBlue may manage vast amounts of data ranging from bookings, pricing, and financials to customer surveys, whereas a company such as Blackstone may manage commercial real estate data as well as alternative asset financials. Managing data at that scale without the necessary infrastructure would be impossible for such enterprises.

Role of Machine Learning and AI

Automating the data profiling process allows teams to focus on analysis and decision-making, significantly improving efficiency. AI and ML technologies can automate the definition of rules based on historic data’s shapes and patterns, ultimately automating the detection and correction of anomalies, ensuring that data remains accurate and reliable. These technologies play a crucial role in automating data quality tasks, reducing the manual effort required and allowing organizations to scale their data quality efforts effectively.

By leveraging AI and machine learning, organizations can proactively address data quality issues, ensuring that data remains a valuable asset for strategic decision-making. These technologies not only help identify anomalies but also predict potential data quality issues, enabling preemptive actions to maintain high data quality standards.
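The core idea of deriving a rule from historical data, rather than writing it by hand, can be shown with a plain statistical stand-in. The sketch below is not a machine learning model: it simply learns an acceptable range from past daily row counts (mean plus or minus `k` standard deviations) and flags new values outside it. The sample history and the choice of `k = 3` are assumptions.

```python
from statistics import mean, stdev


def learn_bounds(history, k=3.0):
    """Derive an acceptable range from past observations: mean +/- k standard deviations."""
    center = mean(history)
    spread = stdev(history)
    return center - k * spread, center + k * spread


def is_anomalous(value, bounds):
    """True when the value falls outside the learned range."""
    low, high = bounds
    return not (low <= value <= high)


# Hypothetical row counts from the last seven daily loads.
daily_row_counts = [1000, 1020, 980, 1010, 990, 1005, 995]
bounds = learn_bounds(daily_row_counts)

print(is_anomalous(1015, bounds))  # within the usual range
print(is_anomalous(400, bounds))   # far below the usual range
```

Real AI-driven tools replace this fixed formula with models that account for seasonality and trend, but the workflow is the same: learn what normal looks like, then alert on departures from it.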

Data Observability Solutions

Data observability solutions give organizations insight into the health, performance, and reliability of their data in a black-box fashion. Data observability focuses on the deliverability, timeliness, volumetrics, and lineage of data, whereas data quality solutions examine the content of the data in more depth. Think of it as checking whether the water pipes are functional versus assessing the quality of the water flowing through them. Observability solutions provide visibility into data lineage, dependencies, and transformations, enhancing overall data understanding. Real-time monitoring enables swift identification and resolution of issues, ensuring data remains accurate and reliable.
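In the spirit of the pipe analogy, observability checks look at whether data arrived on time and in roughly the expected amount, without inspecting individual values. The following is a minimal sketch; the function names, the six-hour freshness window, and the 20% volume tolerance are all assumptions chosen for illustration.

```python
from datetime import datetime, timedelta


def check_freshness(last_loaded_at, now, max_age):
    """True when the most recent load is no older than the allowed age."""
    return now - last_loaded_at <= max_age


def check_volume(row_count, expected, tolerance=0.2):
    """True when the row count is within the tolerance of the expected volume."""
    return abs(row_count - expected) <= tolerance * expected


# Hypothetical load metadata; `now` is passed in so the check is reproducible.
now = datetime(2024, 5, 1, 12, 0)
last_load = datetime(2024, 5, 1, 9, 30)

print(check_freshness(last_load, now, max_age=timedelta(hours=6)))
print(check_volume(row_count=950, expected=1000))
```

Both checks run on metadata alone, which is why they can be applied broadly across many tables before deeper content-level rules are written.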

Best Practices for Scalability

To ensure that data quality management can scale effectively, organizations must adopt best practices that encompass centralized governance, regular training, and a data-driven culture. These practices not only enhance operational efficiency but also maximize the value derived from high-quality data.

Higher data quality saves time and money and helps avoid regulatory fines, highlighting the importance of maintaining consistent data quality standards.

Centralized Data Governance

A comprehensive data governance framework should include data policies, data standards, defined roles, and a business glossary to align data management processes. The centralized data team at Fox Networks, for example, is responsible for critical areas such as data ingestion, security, and optimization, ensuring streamlined access and oversight. Accelerating improvements in data quality, accuracy, coverage, and efficiency is achievable through a centralized governance approach.

Centralized data governance ensures data consistency and integrity across the organization. By having a unified framework, organizations can streamline their data management processes, ensuring that data remains accurate, reliable, and secure.

Regular Training and Education Programs

Training is crucial for ensuring data quality. Education contributes significantly to the reliability of data. Effective training can include seminars, e-learning courses, and hands-on training sessions, ensuring that employees are well-versed in data quality methodologies and best practices. Establishing feedback mechanisms ensures ongoing refinement of data quality practices based on stakeholder input, fostering a culture of continuous improvement.

A successful data quality plan should integrate data quality methodologies into corporate applications and business processes. Implementing feedback loops encourages ongoing evaluation and adaptation of data quality practices based on stakeholder insights, ensuring that data quality remains a top priority across the organization.

Building a Data-Driven Culture

Clearly defining data quality and specific metrics is essential for aligning everyone in the organization on data quality goals. Encouraging user empowerment in identifying and resolving data quality issues fosters a proactive data quality culture. Implementing feedback mechanisms fosters a culture of ongoing data quality enhancement, ensuring that data remains a valuable asset for the organization.

Building a data-driven culture empowers employees to proactively address data quality issues, ensuring that data remains accurate, reliable, and valuable for making informed business decisions. This cultural shift is crucial for maintaining high data quality standards and driving operational excellence across the organization.

Monitoring and Measuring Data Quality

Continuous monitoring is crucial in data quality management, ensuring standards are upheld and data remains reliable. Outdated or poor-quality data can lead to obsolete insights, missed opportunities, and incorrect applications of knowledge, significantly affecting decision-making. Timely data availability enhances its value and helps inform decisions regarding stock levels, market trends, and customer preferences, emphasizing the need for stakeholder engagement.

Creating feedback loops and establishing data quality KPIs enhances continuous improvement, while regular communication about data governance policies is essential. In the following subsections, we will explore the importance of setting up data quality dashboards, regular audits and reviews, and feedback loops for continuous improvement.

Setting Up Data Quality Dashboards

Data must be of good quality and readily available to its various consumers at the point of analysis. Data profiling tools help organizations understand the overall health of their data, providing valuable insights into data quality metrics, trends, and areas requiring attention. A data quality dashboard supports this by visualizing and reporting on data quality and by exposing additional quality KPIs at the point of analysis, so that consumers can make better-informed decisions.

By setting up data quality dashboards, organizations can quickly identify and address data quality issues, ensuring that data remains accurate, reliable, and valuable for making informed business decisions.

Regular Audits and Reviews

Regular audits proactively identify and rectify data quality issues before they escalate. Implementing an audit strategy is critical to maintaining high levels of data integrity across the organization. Audits should focus on high-risk areas where data quality issues are more likely to occur, ensuring effective monitoring and consistent evaluation of data quality.

Utilizing data profiling tools during audits can enhance the accuracy of the findings, while engaging cross-functional teams fosters a comprehensive understanding of data quality across departments. Findings from audits should be analyzed and used to implement corrective actions and updates to data management practices, ensuring that data quality remains high.

Feedback Loops for Continuous Improvement

Continuous feedback loops in data quality management allow organizations to identify and rectify issues proactively. Implementing feedback mechanisms is essential for creating a responsive data quality environment, involving automated alerts, regular reporting, and stakeholder input to monitor data quality.

Effective implementation of feedback mechanisms ensures all relevant stakeholders are engaged and informed about data quality issues. Utilizing data quality metrics allows organizations to quantify the effectiveness of the feedback loops and make informed decisions.

Regular assessment of data quality metrics can highlight trends and areas needing improvement, ensuring that iterative improvements based on feedback are continuously implemented.
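A feedback loop of this kind can start as a simple comparison between measured metrics and agreed targets, producing alerts for stakeholders. The sketch below assumes metrics are reported as fractions between 0 and 1; the metric names and target values are hypothetical.

```python
def evaluate_kpis(metrics, thresholds):
    """Return alert messages for every metric that is missing or below its target."""
    alerts = []
    for name, minimum in thresholds.items():
        value = metrics.get(name)
        if value is None:
            alerts.append(f"{name}: no measurement reported")
        elif value < minimum:
            alerts.append(f"{name}: {value:.2%} is below target {minimum:.2%}")
    return alerts


# Hypothetical measurements and targets for one dataset.
metrics = {"completeness": 0.97, "conformity": 0.88}
thresholds = {"completeness": 0.95, "conformity": 0.90, "timeliness": 0.99}

for alert in evaluate_kpis(metrics, thresholds):
    print(alert)
```

Treating a missing measurement as an alert in its own right keeps gaps in monitoring from passing silently.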

Summary

In summary, mastering data integrity and managing data quality at scale requires a comprehensive approach that includes defining data quality, overcoming challenges, leveraging technology, and adopting best practices. By implementing a robust data quality strategy, organizations can ensure that their data remains accurate, reliable, and valuable for making informed business decisions. Embracing a data-driven culture and continuously improving data quality practices will enable organizations to thrive in the digital age, turning data into a strategic asset that drives operational excellence.

Frequently Asked Questions

What are the key components of a data governance framework?

A robust data governance framework should encompass data management rules, policies, quality standards, and clearly defined roles like data owners, stewards, and custodians. These elements ensure accountability and effective oversight of data assets.

How can AI and ML improve data quality management?

AI and ML enhance data quality management by automating the detection and correction of anomalies, significantly reducing manual effort. This allows organizations to scale their data quality initiatives more efficiently.

Why is regular data profiling important?

Regular data profiling is essential as it uncovers patterns and anomalies that can compromise data quality, thereby maintaining accuracy and consistency. This proactive approach ensures your data remains reliable for informed decision-making.

What role do data quality dashboards play in managing data quality?

Data quality dashboards play a crucial role in managing data quality by offering visual insights into metrics and trends, which facilitate prompt identification and resolution of issues. This proactive approach ensures that data remains reliable and actionable.

How can organizations build a data-driven culture?

To build a data-driven culture, organizations should establish clear data quality goals, empower employees to address data issues, and create feedback mechanisms for continuous improvement. This approach encourages accountability and engagement among staff, leading to a more data-centric environment.